Enterprise AI Data Catalog Platform That Eliminates Data Chaos

Unify AI-powered discovery, governance, and observability across your data estate to deliver data quality, compliance, and AI readiness.

Trusted by enterprise data teams around the world

Your data catalog wasn’t built for this.

Traditional catalogs were built for batch reporting and static warehouses, not for streaming data, complex data stacks, or production AI.

The cost shows up everywhere.

How DataHub solves what legacy catalogs can’t

Discovery in seconds, not days

Accelerate discovery with conversational search and automated documentation

Compliance that runs itself

Turn hours-long investigations into rapid resolution with cross-platform data lineage and AI-powered debugging

Governance without the overhead

Maintain compliance at scale with continuous monitoring and AI-driven quality checks

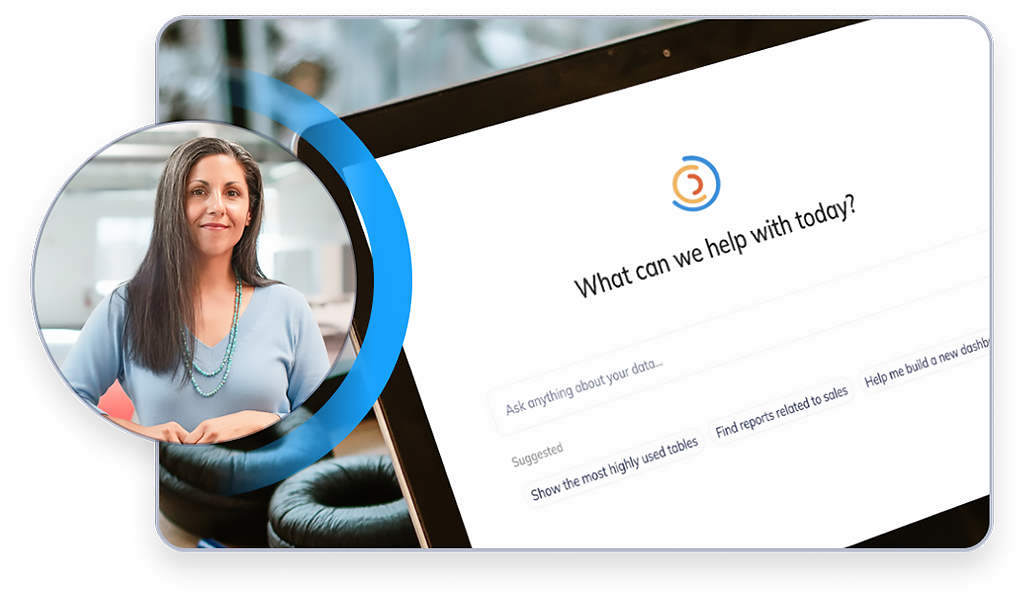

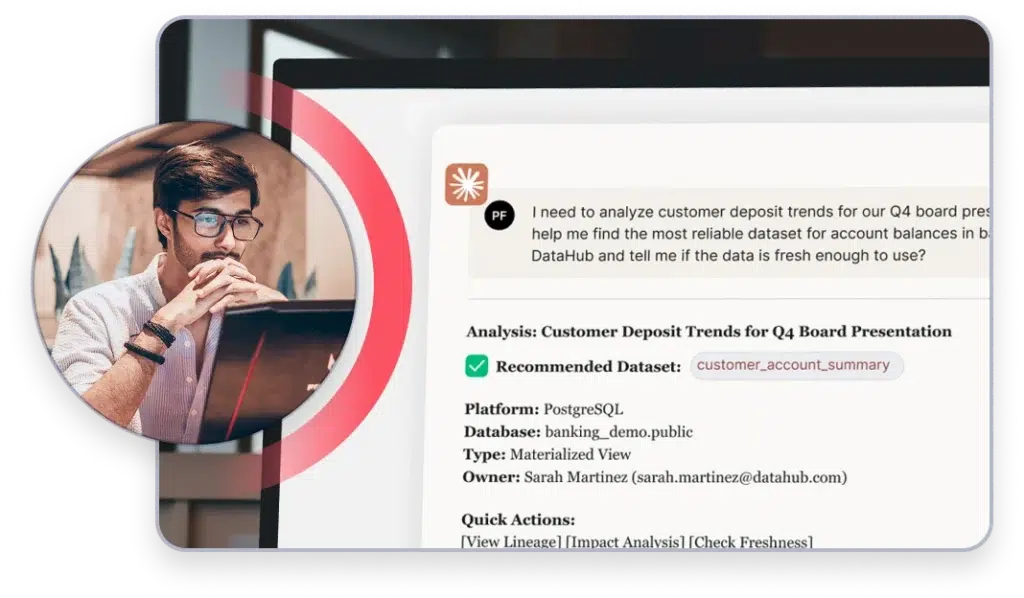

Discovery

Break down data silos with conversational data discovery. The Ask DataHub chat agent finds trusted data through natural language questions.

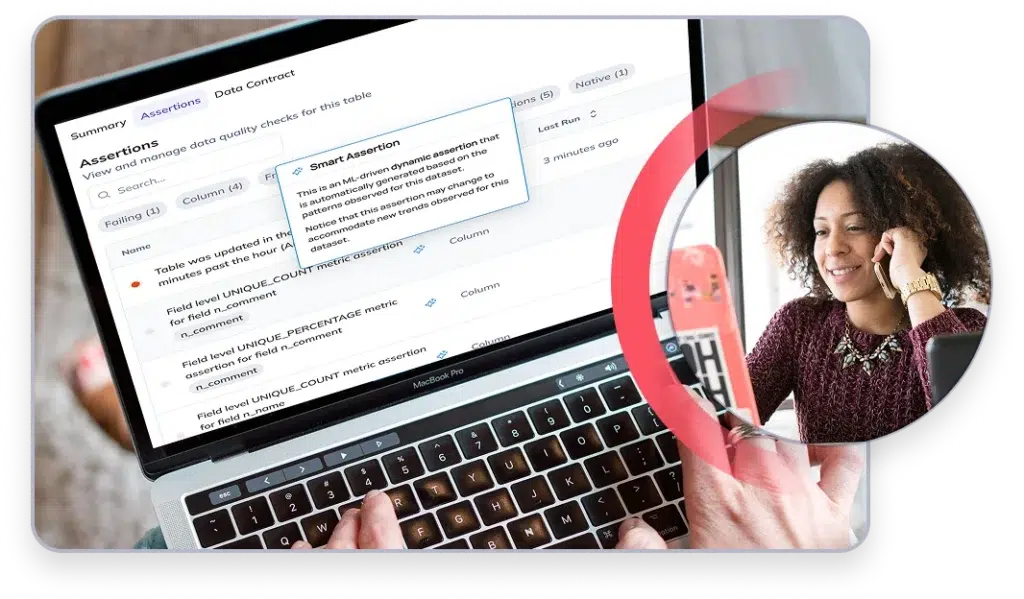

Observability

Detect and resolve quality issues before they impact production. Automated anomaly detection and quality checks keep data reliable.

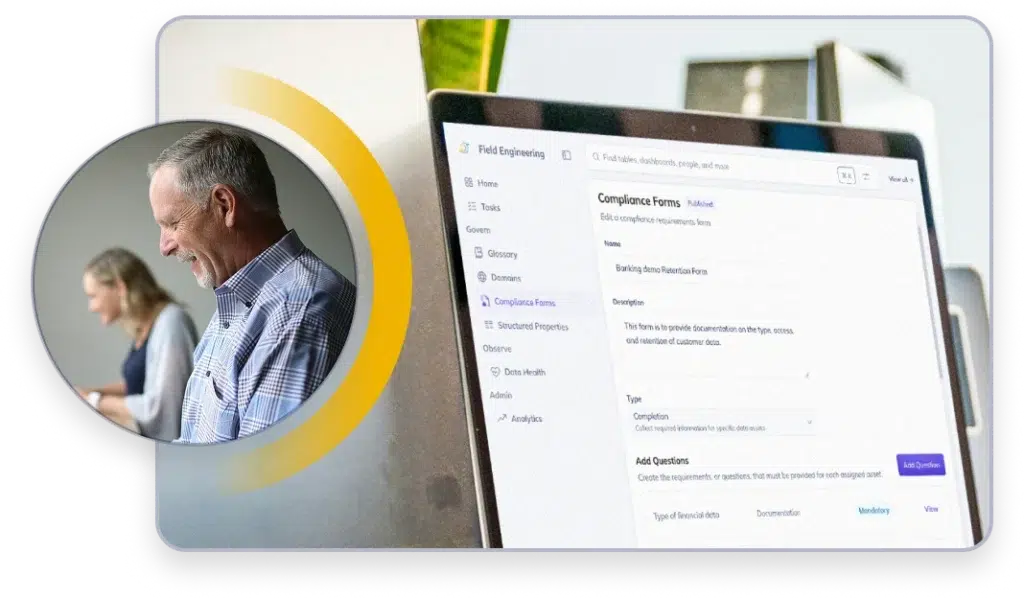

Governance

Operationalize AI readiness with automated compliance. Set metadata tests, track certification workflows, and maintain enterprise-wide visibility into data health.

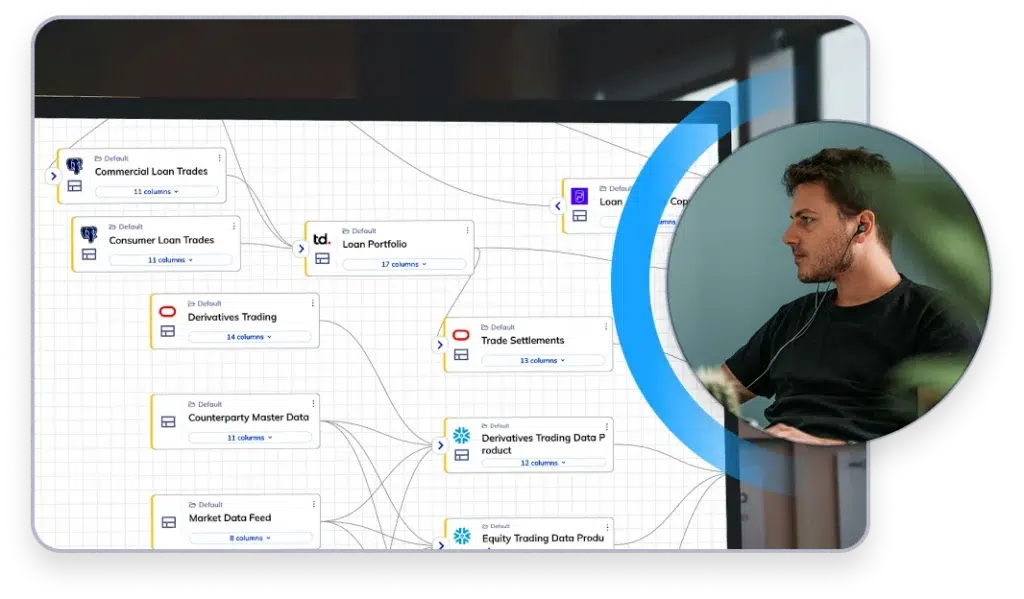

Lineage

Understand the true impact of changes before you make them. Column-level lineage traces data flows from source systems through transformations to downstream AI models and business applications.
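To make the idea of impact analysis concrete, here is a minimal sketch of a downstream traversal over column-level lineage. It is an illustration only and does not use the DataHub API: the `LINEAGE` mapping, the column names, and the `downstream_impact` helper are all hypothetical, and the lineage graph is assumed to be a simple in-memory dictionary.

```python
from collections import deque

# Hypothetical column-level lineage: each upstream column maps to the
# downstream columns derived from it. These names are invented for illustration.
LINEAGE = {
    "orders_db.orders.amount": ["warehouse.fct_orders.amount_usd"],
    "warehouse.fct_orders.amount_usd": [
        "warehouse.agg_revenue.daily_revenue",
        "ml.churn_features.avg_order_value",
    ],
    "ml.churn_features.avg_order_value": ["ml.churn_model.input"],
}


def downstream_impact(column, lineage):
    """Return every column reachable downstream of `column`, breadth-first."""
    seen = {column}  # guards against cycles and excludes the starting column
    order = []
    queue = deque([column])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# Which columns would be affected by changing the source `amount` column?
impacted = downstream_impact("orders_db.orders.amount", LINEAGE)
for name in impacted:
    print(name)
```

In this toy graph, a change to the source column reaches a warehouse table, a revenue aggregate, a feature table, and finally a model input, which is the kind of end-to-end path the lineage view is meant to surface before a change ships.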

AI + Automation

Free your team from repetitive metadata management tasks. AI documentation generation, intelligent glossary classification, and a hosted MCP Server automate data catalog maintenance.

Real results from DataHub customers

ENTERTAINMENT/MEDIA

Netflix unifies discovery for AI-ready operations

Netflix unified discovery across data, ML, and software assets to eliminate siloed internal knowledge across a growing data estate. Cross-domain lineage enables proactive incident prevention while self-serve governance maintains standards at scale.

FINANCIAL TECHNOLOGY

Chime breaks down barriers to accelerate innovation

Organizational silos separated Chime’s data producers from data consumers, hiding data issues and impacting business insights. Now, cross-platform lineage establishes clear ownership, continuous monitoring catches quality issues early, and unified metadata enables cross-team collaboration.

Built on proven open-source innovation

#1

Open-source data catalog worldwide

3M+

Monthly PyPI downloads

3,000+

Organizations using DataHub

14,000+

Community members collaborating globally

Ready to see DataHub Cloud in action?

See how DataHub Cloud transforms enterprise data management with AI-powered discovery, intelligent observability, and automated governance for the AI era.