What is Data Discovery?

Tools, Techniques & Use Cases Explained

Quick definition: Data Discovery

Data discovery is the process of identifying, cataloging, and understanding data assets across an organization’s ecosystem. Modern data discovery goes beyond simple search to include automated metadata collection, semantic understanding of data meaning and relationships, impact analysis through lineage tracking, and validation of data quality and compliance status—all delivered through conversational interfaces embedded in existing workflows.

Data analysts, developers, and data scientists often spend more time searching for data than analyzing data and building with it. This isn’t because they’re inefficient; it’s because the discovery methods that worked when you had a few data sources become unmanageable when you have dozens or 100s of sources.

Traditional data discovery relies on:

- Keyword searches

- Manual documentation

- Institutional knowledge about where critical datasets live.

But this approach collapses under the weight of complex data ecosystems—cloud warehouses ingesting terabytes daily, streaming platforms processing millions of events per second, ML feature stores updating continuously, and legacy systems.

Data discovery in 2026 isn’t just about finding tables. It’s about understanding context across your entire data-to-AI pipeline:

- Which version of data trained which version of a model?

- Where PII exists at the column level for GDPR compliance?

- What breaks if you modify a transformation?

- Whether an AI system is using validated, compliant training data

Discovery has evolved from “Google for data” (keyword search through static inventories) to “ChatGPT for data” (conversational interfaces that understand intent and provide answers with full context).

Why is data discovery important today?

The convergence of AI adoption, regulatory complexity, and architectural decentralization has transformed data discovery from a productivity enhancer into strategic infrastructure.

Scaling AI beyond pilots requires validated data

AI and ML systems require validated training data with documented provenance. Before models reach production, data teams must answer questions that traditional discovery tools weren’t built to handle:

- What data trained this model?

- Which version of the customer dataset fed v2.3 of our recommendation engine?

- If we retrain with updated data, can we reproduce the original results?

- Does this model use compliant data sources?

These aren’t optional questions for enterprises scaling AI beyond pilots.

Regulatory frameworks demand column-level visibility

Regulatory compliance frameworks like GDPR and CCPA demand precise visibility into where sensitive data lives: Not “somewhere in this 200-column table” but exactly which columns contain PII, how that data flows through transformations, and which downstream systems consume it. Column-level discovery has shifted from nice-to-have to mandatory for organizations operating in regulated industries or markets.

Modern businesses demand self-service data access

Speed expectations have fundamentally changed:

- Business stakeholders expect self-service access to trusted data for advanced analytics, not ticket queues and multi-day wait times

- Data scientists expect to validate training data compliance programmatically before starting expensive jobs

- Analysts expect to understand data quality and freshness before conducting data analysis or building dashboards

Traditional discovery approaches that require hunting through documentation or asking internal knowledge experts don’t meet these velocity requirements.

Why most traditional data discovery approaches fail

| Traditional Discovery | Modern Discovery (DataHub) | |

| Search method | Keyword matching against table/column names | Conversational search that understands intent and meaning |

| Metadata collection | Manual documentation, scheduled batch scans | Automated ingestion from 100+ sources, real-time event processing |

| User interface | Separate catalog tool requiring context switching | Conversational interfaces embedded in Slack, MS Teams, BI tools, query editors |

| Lineage visibility | Table-level, often incomplete | Column-level across entire data supply chain |

| AI/ML support | Limited to data assets only | Unified discovery across data, models, features, training datasets |

| Documentation | Manual, quickly becomes stale | AI-generated, automatically enriched from lineage and metadata, and customizable to organizational standards |

| Trust signals | Manually curated or absent | Usage-driven intelligence shows popular, trusted datasets |

| Access control | Coarse-grained or manual | Automated, granular controls at column/row level |

Most organizations still rely on discovery methods designed for a world that no longer exists: One of relatively static data warehouses updated on batch schedules, analysts who knew SQL and table naming conventions, and data teams small enough that someone could remember where critical datasets lived.

The keyword search guessing game

Traditional catalogs treat discovery as keyword matching against table and column names. This works fine if you know the exact naming convention. It fails catastrophically when you don’t. Is customer contact information stored in cust_email, customer_contact, user_email_address, or contact_info?

Data teams waste hours guessing variations, hoping one matches. Multiply this across hundreds of tables with inconsistent naming standards across teams, and discovery becomes like an archaeological excavation rather than the straightforward search it should be.

Manual documentation doesn’t scale

Manual data discovery approaches assume humans will document datasets: Writing descriptions, tagging business terms, noting data quality issues, explaining transformations.

This breaks down immediately when:

- Documentation goes stale within weeks as pipelines change faster than teams can update catalogs

- High-value datasets used constantly never get documented because the experts using them are too busy to write about them

- Low-value datasets get extensive documentation from teams trying to justify their existence

Then, the correlation between documentation quality and data value inverts.

Built for batch, breaking under real-time

Most traditional discovery tools ingest metadata on schedules: Nightly, weekly, or when someone remembers to trigger a scan.

This worked when data warehouses updated overnight. It fails when Kafka topics stream continuously, when feature stores update in real-time, or when ML models demand streaming data for accurate predictions. By the time the catalog reflects reality, that reality has changed. Data engineers troubleshoot pipeline failures with stale lineage information. Governance teams enforce policies on data they don’t know exists yet.

Fragmented tools create blind spots

Organizations typically handle discovery, data governance, and observability as separate concerns requiring separate tools. You:

- Search for data in the catalog

- Check quality in your observability platform

- Verify compliance in your governance tool

Each system maintains its own metadata, its own lineage graph, its own understanding of data relationships. When a quality issue impacts a compliance-critical dashboard, no single system connects those dots. Teams waste hours manually tracing dependencies across fragmented tools, and they still miss the full picture.

Can’t support AI workflows

Traditional catalogs excel at one thing: helping data analysts find tables to query. They struggle with everything AI systems need. Where’s the lineage connecting training datasets back through feature engineering to raw events? Which models consumed this data, and what was their performance? What transformations were applied, and do they introduce bias? Has this data been validated against AI readiness criteria? These questions block AI from moving beyond pilots into production, and legacy discovery tools weren’t architected to answer them.

“Discovery tools built for human keyword searches can’t support autonomous AI agents that need to validate governance requirements programmatically, or data scientists who need to debug data quality issues in upstream tables.”

– John Joyce, Co-Founder, DataHub

Seven improvements that modern discovery platforms deliver

Next-generation discovery eliminates the manual work, guesswork, and fragmentation that make traditional approaches unsustainable at scale. The shift isn’t just an incremental improvement in existing methods; it’s an architectural change that enables fundamentally different capabilities.

Modern data discovery tools like DataHub deliver these capabilities through an Enterprise AI Data Catalog built for the AI era. While legacy catalogs treat discovery as static keyword search in standalone tools, DataHub is a context platform that delivers conversational, semantic discovery embedded in workflows where teams already work, with unified visibility across data and AI systems.

Here’s what sets modern platforms like DataHub apart:

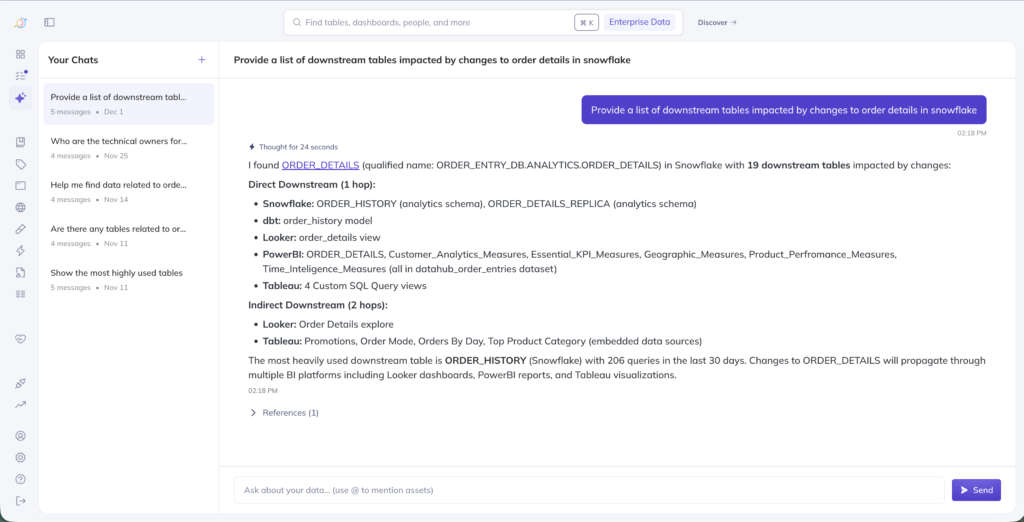

1. Conversational discovery with AI-powered search

Natural language interfaces eliminate the need to understand catalog-specific search syntax or remember exact table names. Users ask questions in plain English: “Show me all tables with customer PII,” “What’s the freshness of our revenue data?”, or “Which dashboards use the orders table?” The system converts these questions into structured searches, generates SQL queries when relevant, and enables conversational follow-ups—whether in the DataHub interface itself or embedded in tools like Slack and Teams.

Behind this conversational layer sits semantic search that understands intent and meaning, not just literal keywords. When you search for “contact information,” it surfaces email addresses, phone numbers, Slack handles, and mailing addresses—even when those exact terms don’t appear in table or column names. This works through AI and machine learning models trained to understand data relationships and business context.

Semantic search also learns from usage patterns to identify patterns that surface the most relevant data. When multiple users search for “customer revenue” and consistently click on the quarterly_sales_summary table, the system learns that association and ranks it higher for similar future queries. This collective intelligence surfaces the datasets people actually use, not just the ones with keyword-rich documentation.

AI-powered classification detects and tags sensitive data types (like PII, financial information, health records) without manual review of every column. Machine learning models automatically generate documentation by analyzing transformation logic, popular queries, and usage patterns. Descriptions that would take hours to write manually appear automatically, capturing not just what data is but how it’s actually used. Once generated, documentation propagates automatically through column-level lineage—so a description written for a source column flows downstream to all dependent columns, eliminating redundant documentation work while maintaining consistency across your entire data pipeline.

This conversational approach democratizes discovery, enabling teams to extract meaningful insights from data without technical barriers.

Non-technical users who would avoid traditional catalogs because they feel like developer tools can now find data by asking questions. Analysts don’t need to know your naming conventions. Business stakeholders can validate data sources themselves rather than filing tickets.

“We added Ask DataHub in our data support workflow and it has immediately lowered the friction to getting answers from our data. People ask more questions, learn more on their own, and jump in to help each other. It’s become a driver of adoption and collaboration.”

– Connell Donaghy, Senior Software Engineer, Chime

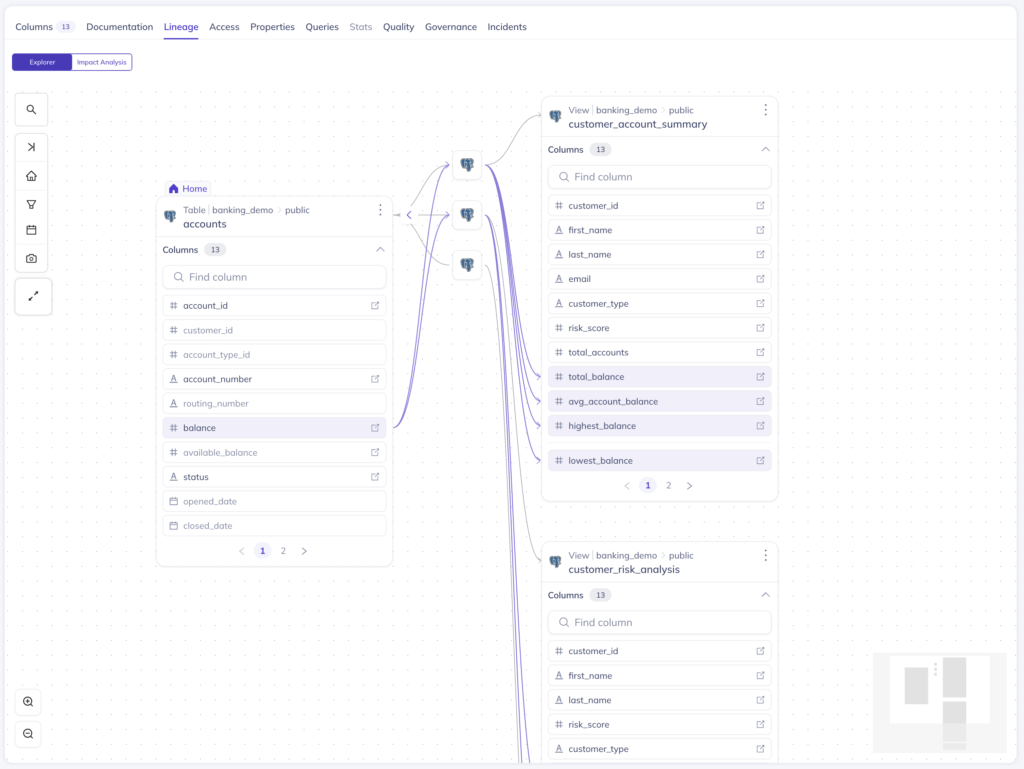

2. Interactive lineage as discovery infrastructure

Data lineage shows how data flows from source systems through transformations to final consumption in dashboards, reports, and ML models. But lineage isn’t just documentation; it’s discovery infrastructure that answers questions static catalogs can’t.

Column-level lineage traces individual fields through transformations, not just table-to-table relationships. This precision matters for compliance (exactly which columns contain PII and where do they flow?) and for impact analysis (what breaks if I change this specific field?). When analysts ask “Is this the source or a copy?”, lineage provides definitive answers in seconds rather than requiring days of investigation.

Interactive lineage graphs provide data visualization that lets users explore relationships visually, filtering by system type, time range, or specific assets. Multi-hop lineage spans the entire data supply chain (from Kafka events through Spark transformations to Snowflake tables to Looker dashboards to SageMaker models), showing dependencies across platforms that separate tools can’t connect.

The business impact shows up in incident response and compliance validation. Robinhood leveraged column-level lineage to satisfy GDPR requirements for data supply chain visibility, enabling their expansion into European markets.

3. Discovery for data and AI systems

As organizations scale AI and ML beyond pilots, discovery must extend beyond traditional data assets to include models, features, training datasets, and the relationships between them. This unified visibility across data and AI infrastructure answers questions that traditional catalogs weren’t designed to handle.

Model lineage tracks relationships between datasets, ML models, and feature stores, showing:

- Which data trained which model

- Which features were active

- What the data quality metrics were at training time

When model performance degrades, teams need to understand whether data drift or model changes are responsible. Without lineage connecting models back to training data versions, this analysis requires manual investigation.

Data versioning for ML pipelines tracks which snapshot or version of training data fed each model version. This matters for reproducibility (can we recreate the exact training conditions?) and debugging (what changed between model v2.2 and v2.3?). When regulators ask “what data trained this model?”, organizations need definitive answers with audit trails, not approximations.

End-to-end traceability spans from raw data sources through transformations and feature engineering to model training to production AI features. This visibility enables impact analysis when data sources change (which AI features break?) and compliance validation before models deploy (is this model using approved data sources?).

Apple uses DataHub with custom entities and connectors to support context management for data and AI assets across their ML platform, demonstrating that enterprise AI at scale requires unified discovery across the full data-to-AI pipeline.

4. Automated, real-time metadata collection

Modern discovery platforms automatically extract metadata from 100+ data sources (including Snowflake, BigQuery, Databricks, dbt, Airflow, Looker, Tableau, Kafka, and more) without manual cataloging. Connectors continuously sync metadata as data ecosystems evolve, keeping discovery current without human intervention.

This automated data collection extends beyond basic schema information to:

- Operational metadata (query patterns, performance metrics, error rates)

- Usage metadata (who’s accessing what, when, how often)

- Quality metadata (freshness, completeness, distributions)

The discovery layer stays fresh automatically, reflecting the current state of your data landscape rather than a snapshot from last week’s manual update.

“Manual data management doesn’t scale when you’re cataloging millions of assets across hundreds of systems. AI-powered classification and automated ingestion aren’t optional features—they’re the only way to maintain discovery quality at enterprise scale without requiring an army of data stewards.”

– Maggie Hays, Founding Product Manager, DataHub

Event-driven architecture processes metadata changes in real-time rather than on batch schedules. When a data engineer creates a new dataset in Snowflake, discovery platforms capture that event within seconds. Governance policies evaluate the new asset immediately. If it contains PII based on automated classification, access controls apply before anyone queries it—automatically, in production, and without human intervention.

This real-time model supports both human decision-making and automated pipelines. Data scientists see current quality metrics and usage patterns, not last week’s snapshot. ML training pipelines validate lineage and compliance programmatically before starting expensive jobs.

Foursquare improved data user productivity by reducing time-to-discovery and access from days to minutes through automated ingestion that eliminated the documentation backlog and kept metadata continuously fresh.

5. Usage-driven intelligence and curated data products

Modern discovery platforms track which datasets are queried most frequently, by whom, and for what purposes. This usage metadata surfaces as trust signals that deliver valuable insights in search results: Popular, frequently-accessed datasets with active owners rank higher than rarely-touched tables of unknown provenance.

Data profiling through usage patterns also identifies ‘zombie datasets’ consuming storage and compute resources but rarely or never queried. This intelligence enables confident deprecation of unused assets, directly cutting infrastructure costs. DPG Media saved 25% in monthly data storage costs after implementing usage-based discovery that revealed which data could be safely deleted.

The same usage tracking accelerates onboarding. New team members see which datasets their colleagues actually use, reducing the risk of building on stale or deprecated data. Instead of guessing which of 10 similar tables is “correct,” they see popularity and trust indicators that surface the right answer immediately.

Data products take this a step further by bundling high-quality datasets into discoverable collections organized by domain, use case, or business unit. These pre-vetted assets come with embedded quality standards, documentation, and access policies. Instead of discovering hundreds of customer tables and trying to determine which is “correct,” users find curated Customer 360 data products that domain teams have certified as reliable.

Data products establish clear ownership and SLAs. When issues arise, users know exactly who maintains the data and what response times to expect. This clarity accelerates both discovery (finding the right data) and usage (trusting it enough to build on it).

6. Discovery embedded where work happens

Discovery that requires leaving your workflow to search a separate tool creates friction that kills adoption. Modern platforms embed discovery context directly where teams already work, surfacing metadata in BI tools, exposing lineage during query writing, and enabling programmatic access for automated systems.

- Chrome extensions surface discovery metadata directly in BI tools like Looker and Tableau. Analysts see lineage graphs, quality scores, and ownership information inline while building dashboards—without opening a separate catalog. The context they need to make informed decisions appears automatically in their existing workflow, eliminating the “discovery tax” of context switching.

- API-first architecture means discovery integrates into custom workflows, CI/CD pipelines, and automated validation checks. Data quality gates in deployment pipelines query discovery metadata programmatically to enforce standards before code ships. ML training pipelines validate lineage and compliance before starting expensive jobs. This programmatic access enables discovery to function as infrastructure that automated systems depend on, not just a search interface for humans.

- AI agent integration through standards like Model Context Protocol (MCP) allows autonomous systems to discover datasets, validate compliance, check quality, understand lineage, and record their actions. As organizations deploy AI assistants and autonomous data agents, these systems need the same discovery capabilities humans do—but through APIs designed for machine consumption at machine speed.

“The biggest barrier to catalog adoption isn’t features—it’s friction. When data scientists have to leave their notebook, switch to a catalog UI, search, copy a table name, then return to continue working, they simply won’t do it. We designed DataHub’s embedding strategy around a simple principle: Discovery that requires conscious effort to access will always have an adoption problem. The only sustainable approach is making metadata appear automatically wherever work gets done.”

– John Joyce, Co-Founder, DataHub

This embedding strategy eliminates the adoption barrier inherent in standalone tools. When discovery context appears automatically where work happens, teams use it without conscious effort. The platform becomes invisible infrastructure rather than a separate destination.

7. Compliance-ready discovery with granular access controls

Search results automatically filter based on role-based access policies. Users only see data they’re authorized to access, preventing accidental exposure of sensitive information. Data security through access-aware discovery becomes essential as organizations democratize data access beyond trusted admins to broader analyst populations.

Access controls work at granular levels (table, column, or row-level) aligning directly with compliance requirements. The same column-level visibility that enables GDPR compliance (knowing exactly which columns contain PII) integrates with access policies to ensure only authorized users can discover and access sensitive data. A marketing analyst might discover aggregated customer segments while being blocked from individual PII. A data scientist might access training datasets while being restricted from production customer data.

This granular control enables compliant self-service at scale. Organizations can democratize data access without sacrificing governance, knowing that discovery respects regulatory boundaries automatically. The alternative—restricting discovery to a small group of trusted admins—creates the bottlenecks that modern discovery is designed to eliminate.

Buyer’s evaluation checklist

When evaluating enterprise discovery platforms, these architectural and operational factors determine whether a platform scales with your needs:

This checklist works both for initial platform selection and for validating whether existing tools meet evolving requirements. Platforms that excel on these dimensions become strategic infrastructure. Those that don’t become migration projects.

| Evaluation criteria | Why it matters | Tools You’re Considering | ||

| DataHub | Solution #2 | Solution #3 | ||

| Real-time vs. batch metadata processing | Batch scans create gaps where metadata lags reality, breaking automated workflows and governance | Event-driven architecture processes metadata changes in real-time, enabling proactive governance and current context for AI systems | ||

| Column-level vs. table-level granularity | Compliance (GDPR, CCPA) requires knowing exactly which columns contain PII, not approximations | Full column-level lineage, search, and governance across the entire data supply chain | ||

| Unified vs. fragmented architecture | Separate tools for discovery, governance, and observability create blind spots when issues span concerns | Single metadata graph unifying discovery, lineage, quality, and governance—context-aware governance impossible with fragmented tools | ||

| Data-only vs. data + AI coverage | Traditional catalogs handle data assets but can’t track models, features, training datasets, and their relationships | Enterprise AI Data Catalog covering data assets, ML models, feature stores, training data versioning, and end-to-end AI lineage | ||

| UI-only vs. API-first architecture | Platforms designed for human UIs can’t support autonomous agents and automated validation | Comprehensive APIs and hosted MCP Server enabling AI agents, automated governance checks, and integration into CI/CD pipelines | ||

| Proprietary vs. open-source foundation | Vendor lock-in creates migration risk when needs diverge from roadmap | Open-source core with 14,000+ community members, ensuring platform evolves with industry standards | ||

| Fixed vs. extensible metadata model | Rigid schemas force workarounds; fully custom schemas create maintenance debt | Schema-first design with extension mechanisms that preserve upgrade compatibility | ||

| Manual vs. automated metadata collection | Platforms requiring extensive manual documentation never achieve adoption | 100+ pre-built connectors with automated ingestion and AI-generated documentation—discovery layer populates itself | ||

| Integration ecosystem | Limited connectors mean manual work to catalog critical systems; connector maintenance becomes technical debt | 100+ pre-built connectors covering major platforms (Snowflake, BigQuery, Databricks, dbt, Airflow, Looker, Tableau, Kafka, etc.) with active community maintaining compatibility | ||

| Implementation support | Discovery platforms are infrastructure projects requiring expertise; poor implementation support leads to stalled rollouts | Enterprise support with implementation guidance, professional services available, extensive documentation, and active community support | ||

| Community and vendor stability | Proprietary platforms create dependency on single vendor; abandoned projects become technical debt | Founded by LinkedIn engineers who built the original DataHub; 14,000+ community members; adopted by Apple, Netflix, LinkedIn; backed by enterprise customers and open-source sustainability | ||

See DataHub in action

Implementing data discovery: DataHub’s top tips

Even architecturally superior discovery platforms like DataHub can’t deliver value if implementation fails in real-world data discovery use cases. Successful deployments follow patterns that respect organizational reality.

1. Start with high-value use cases, not comprehensive coverage

The impulse to catalog everything before declaring success kills momentum. Instead, identify specific pain points where better discovery delivers immediate, measurable value, like:

- Accelerating incident response through automated lineage

- Enabling self-service for a specific business unit

- Establishing AI readiness for production ML deployment

Each use case builds capability that supports the next. Start narrow, prove value, expand deliberately.

2. Let automation do the heavy lifting

Platforms that require extensive manual cataloging before delivering value never achieve adoption. Choose tools that automatically extract metadata from your existing systems (Snowflake, dbt, Airflow, Looker, whatever you actually use) without requiring teams to document everything first.

The discovery layer should populate itself and stay fresh through automation, not through unrealistic expectations that busy engineers will maintain documentation. Modern AI data catalogs can generate documentation automatically, including descriptions and business context, then propagate those insights through lineage so downstream assets inherit context without manual work. Similarly, discovery that lives in a separate tool requires behavior change that stalls adoption. Discovery embedded where work already happens (in Slack and Teams, in BI tools, in query editors) gets used automatically.

Adoption comes from making discovery invisible infrastructure rather than a separate destination requiring conscious effort to access.

3. Identify the metrics that really matter

Avoid vanity metrics like “number of assets cataloged.” Focus on outcomes that reflect business value, like:

- Time-to-discovery measures how quickly users find what they need

- Time-to-trust measures how long it takes a data consumer to go from discovering a dataset to confidently using it in production

- Incident resolution speed tracks mean time to resolution for data quality issues

These metrics connect discovery improvements to operational impact.

Modern discovery platforms lay the foundation for the autonomous data operations and AI systems that organizations are rapidly adopting. As AI agents increasingly handle routine data tasks, discovery evolves from a tool humans consult to infrastructure that machines depend on for validation, governance, and decision-making. The platforms that operate at machine speed, provide programmatic access for autonomous systems, and unify context across data and AI assets aren’t preparing for a distant future. They’re enabling the present that leading data organizations already inhabit.

Ready to future-proof your metadata management?

DataHub transforms enterprise metadata management with AI-powered discovery, intelligent observability, and automated governance.

Explore DataHub Cloud

Take a self-guided product tour to see DataHub Cloud in action.

Join the DataHub open source community

Join our 14,000+ community members to collaborate with the data practitioners who are shaping the future of data and AI.

FAQs

Recommended Next Reads