What Is a Data Catalog?

Understanding the Gen 2 to Gen 3 Shift

Quick definition: Data catalog

A data catalog is a centralized inventory that helps organizations find, understand, and govern their data assets. It collects metadata (information about your data’s structure, location, ownership, quality, and lineage) and makes it searchable. The data catalog serves data professionals and their teams, who use it to discover what data exists across the enterprise.

That definition still holds. But it fails to capture how dramatically data catalogs have evolved over the past decade, and continue to evolve as AI reshapes enterprise data management.

If you’re evaluating data catalog solutions or experiencing frustrations with your current tooling, understanding these generational differences matters more than comparing feature lists. The catalog that worked for your organization a couple of years ago may not support where you need to go next.

Diving deeper: The three generations of data catalogs

The data catalog market has evolved through distinct generations, each designed for fundamentally different assumptions about how organizations manage data. Understanding these generations determines whether your current tooling can support where your organization needs to go.

Gen 1: No system (spreadsheets and institutional knowledge)

Most organizations start here: Data assets tracked in spreadsheets, Confluence pages, or not at all. Discovery happens through Slack messages and “ask Sarah, she knows where that lives.” And it makes perfect sense why you’d start here: You have something like 10 data sources and a small team that talks daily.

But this approach breaks immediately at any real scale. Knowledge walks out the door when people leave. Onboarding takes months because there’s no documentation to consult. Compliance audits become archaeological expeditions through chat histories and abandoned wikis.

Many organizations still operate here, even ones with sophisticated data infrastructure in other respects. The pain is real but diffuse: Death by a thousand Slack pings rather than a single catastrophic failure.

Gen 2: Static catalogs (the portal era)

Examples: Alation, Collibra, Informatica, and AWS Glue Data Catalog

Gen 2 catalogs emerged around 2015 to solve the chaos of Gen 1. The core need they serve: A human-centric portal for searching and documenting data assets. And the Gen 2 catalog does a lot really well, including:

- Creates a searchable inventory of data assets

- Provides a place to document datasets with descriptions, owners, and tags

- Supports basic compliance through manual classification

- Enables keyword search across table and column names

These capabilities were genuinely valuable when they emerged. For organizations graduating from spreadsheets, Gen 2 catalogs were a significant improvement.

Gen 2 assumptions that no longer hold

However, Gen 2 catalogs are built on some assumptions that no longer hold in our current reality:

- Humans are the primary users: Portals first, APIs as afterthoughts. The catalog is a destination you visit, not infrastructure your systems depend on.

- Metadata changes slowly: Batch ingestion on nightly or weekly schedules is acceptable because data warehouses are updated overnight anyway.

- Manual curation is sustainable: Someone will document datasets, maintain tags, update descriptions as things change.

- Tables and columns are the scope: Tables, views, dashboards—not ML models, features, or training datasets.

“Many vendors are retrofitting AI features onto Gen 2 architectures—adding chatbots, auto-classification, inferred data descriptions. The marketing suggests parity with modern platforms. But the underlying limitations remain: metadata inevitably becomes stale, being outside of the critical path of how data practitioners do their job.”

– John Joyce, Co-Founder, DataHub

Gen 3: AI data catalogs (the current imperative)

Example: DataHub

Gen 3 represents an architectural shift, not a feature upgrade. The core design: A platform that serves humans AND machines, manages data AND AI assets, and operates at real-time speed.

Here’s what defines the modern data catalogs of Gen 3:

- Dual-audience architecture: APIs and programmatic access are first-class citizens alongside human interfaces. AI agents, automation scripts, and internal tools can query and update metadata at machine speed—not as an afterthought, but as a primary design goal.

- Unified data + AI scope: The catalog covers traditional data assets (tables, views, dashboards, pipelines) AND AI assets (ML models, features, vector databases, training datasets, LLM pipelines). A single lineage graph connects raw data through transformations to model predictions and downstream applications.

- Real-time metadata backbone: Stream-processing architecture (Kafka) instead of batch. Technical metadata, business metadata, and operational metadata (query patterns, usage metrics, pipeline health) all update in seconds, not tomorrow morning. When schemas change or incidents fire, the catalog reflects it immediately. Governance policies evaluate new assets in real-time.

- Embedded discovery: Conversational interfaces in Slack and Teams, extensions in BI tools, APIs in notebooks. Discovery happens where work happens, not in a separate portal requiring conscious effort to access.

- Automation at scale: AI-generated documentation, automated classification and tagging, metadata propagation through lineage. The system doesn’t rely on humans to maintain freshness—it populates and updates itself.

Why the Gen 2 → Gen 3 transition is happening now:

Several forcing functions are pushing organizations past what Gen 2 can support:

- Board mandates for AI in production require governed training data with documented lineage. You cannot answer “what data trained this model?” or “does this model use compliant data sources?” with a catalog that doesn’t track AI assets.

- Lean data teams can’t manually curate metadata for thousands of assets across decentralized architectures. Automation isn’t a nice-to-have; it’s the only way to maintain coverage without an army of data stewards.

- Regulatory frameworks (GDPR, CCPA, EU AI Act) require column-level visibility and audit trails that Gen 2 architectures struggle to provide at scale.

- Self-service expectations have shifted. Business stakeholders expect to find and trust data themselves, not file tickets and wait days.

Choosing a Gen 2 catalog means planning a migration in two to three years when its limitations become blocking. Choosing a Gen 3 platform built with Gen 4 in mind (like DataHub is) means the architecture grows with you. DataHub’s API-first design, real-time metadata backbone, and agent-ready interfaces aren’t just solving today’s problems—they’re positioned for where autonomous data operations are heading.

“The Gen 3 data catalog isn’t about having nicer features. It’s about having the architectural foundation required to put AI in production at enterprise scale. Organizations trying to govern AI with Gen 2 tools will hit walls that no amount of manual effort can overcome—not because those tools are bad, but because they were designed for a world that no longer exists.”

– John Joyce, Co-Founder, DataHub

See DataHub in action

Gen 4: Context platforms (the emerging horizon)

Gen 4 is where the industry is heading, though most organizations aren’t there yet. The core concept: AI agents autonomously discover, validate, govern, and manage data assets. The catalog becomes infrastructure that machines depend on for decision-making, not just a tool humans consult occasionally.

Early signals are visible at companies like Block and Apple, where DataHub provides context to automated systems making thousands of decisions per second. Natural language interfaces using emerging standards like Model Context Protocol (MCP) enable AI assistants to query metadata conversationally. Agent-ready APIs support autonomous workflows that validate compliance, check quality, and record actions without human intervention.

Why traditional (Gen 2) data catalogs break down

If you’ve been nodding along to the Gen 2 limitations above, you’re not alone and you may already be feeling some of this pain firsthand. Let’s break down exactly what goes wrong when organizations stay stuck at Gen 2, and why these aren’t edge cases but predictable failure modes.

1. Built for humans, not machines

Gen 2 catalogs were designed as portals humans visit. APIs exist, but as afterthoughts—bolted on to satisfy checkbox requirements rather than architected for serious programmatic use. This breaks when AI agents need to validate governance requirements at machine speed, when CI/CD pipelines need to check lineage before deployment, or when automation scripts need to query metadata without hitting rate limits.

The dual-audience problem isn’t solved by adding an API to a human-centric portal. It requires designing for machine consumption from the ground up.

2. Manual metadata that goes stale

Gen 2 assumes humans will document datasets: Writing descriptions, tagging business terms, noting data quality issues, explaining transformations. This breaks down immediately when documentation goes stale within weeks as pipelines change faster than teams can update catalogs.

The correlation between documentation quality and data value often inverts. High-value datasets used constantly never get documented because the experts using them are too busy. Low-value datasets get extensive documentation from teams trying to justify their existence.

3. Batch updates in a real-time world

Most traditional catalogs ingest metadata on schedules (nightly, weekly, or when someone remembers to trigger a scan). This worked when data warehouses updated overnight. It fails when Kafka topics stream continuously, when feature stores update in real-time, or when ML models retrain hourly.

By the time the catalog reflects reality, reality has changed. Data engineers troubleshoot pipeline failures with stale lineage. Governance teams enforce policies on data they don’t know exists yet.

4. No visibility into AI and ML assets

Traditional catalogs excel at one thing: Helping data analysts find tables to query. They struggle with everything AI systems need:

- Where’s the lineage connecting training datasets through feature engineering to raw events?

- Which models consumed this data, and what was their performance?

- What transformations were applied, and do they introduce bias?

These questions block AI from moving beyond pilots into production, and legacy discovery tools weren’t architected to answer them.

5. Fragmented tools, fragmented visibility

Organizations typically handle discovery, governance, and observability as separate concerns requiring separate tools: You search for data in the catalog, check quality in your observability platform, verify compliance in your governance tool. Each system maintains its own metadata, its own lineage graph, its own understanding of data relationships.

When a quality issue impacts a compliance-critical dashboard, no single system connects those dots. Teams waste hours manually tracing dependencies across fragmented tools—and still miss the full picture.

These failures matter because data catalogs have become strategic infrastructure

Data catalogs were once productivity tools. They were considered nice to have for data discovery, but not mission-critical. That’s changed.

Several converging forces have transformed catalogs into strategic infrastructure that enables or constrains an organization’s AI ambitions.

- AI at scale requires validated, governed training data: Before models reach production, data teams must answer questions that traditional discovery tools weren’t built to handle:

- What data trained this model?

- Which version of the customer dataset fed v2.3 of our recommendation engine?

- Does this model use compliant data sources?

- If we retrain with updated data, can we reproduce the original results?

These aren’t optional questions for enterprises scaling AI beyond pilots.

- Regulatory frameworks demand column-level visibility: GDPR, CCPA, and emerging AI regulations require precise visibility into where sensitive data lives, how that data flows through transformations, and which downstream systems consume it. Column-level discovery has shifted from nice-to-have to mandatory for organizations operating in regulated industries.

- Decentralized architectures mean no single team can hold context: Modern data stacks span multiple clouds, dozens of tools, and teams that operate autonomously. The data engineer who built the pipeline left two years ago. The business context lives in a different department. No single person can remember where critical datasets live or what depends on them. The catalog becomes the institutional memory that makes decentralized architectures governable.

- Business velocity expectations have fundamentally changed: Business stakeholders expect self-service access to trusted data, not ticket queues and multi-day wait times. Data scientists expect to validate training data compliance programmatically before starting expensive jobs. Analysts expect to understand data quality and freshness before building dashboards that reach executives. Traditional approaches that require hunting through documentation or pinging internal subject matter experts don’t meet these velocity requirements.

What the modern data catalog platforms actually deliver

Gen 3 AI data catalogs like DataHub address these failure modes through architectural choices that enable fundamentally different capabilities. Here’s what these data catalogs provide in practice:

A single pane of glass for data and AI assets

Modern catalogs unify traditional data assets (tables, views, dashboards, pipelines, dbt models) with AI assets (ML models, features, vector databases, notebooks, LLM pipelines) in one platform. This doesn’t just broaden coverage, it brings a consistent metadata schema, unified lineage graph, and shared data governance model across both.

Critically, this includes data versioning for ML pipelines. The catalog tracks which snapshot or version of training data fed each model version, which is essential for reproducibility, debugging, and compliance. When regulators ask “what data trained this model?” or when performance degrades and teams need to understand what changed, the answer is documented automatically rather than reconstructed through forensic investigation.

The business impact: You can trace lineage from raw data through transformations to model training to predictions to downstream applications. When a source table changes, you see which models are affected. When a model underperforms, you can trace back through data lineage to understand whether data drift or model changes are responsible.

Without this unification, organizations manage data and AI in separate tools with separate lineage graphs that don’t connect. Governance gaps emerge at the boundaries. Compliance risks multiply as AI scales.

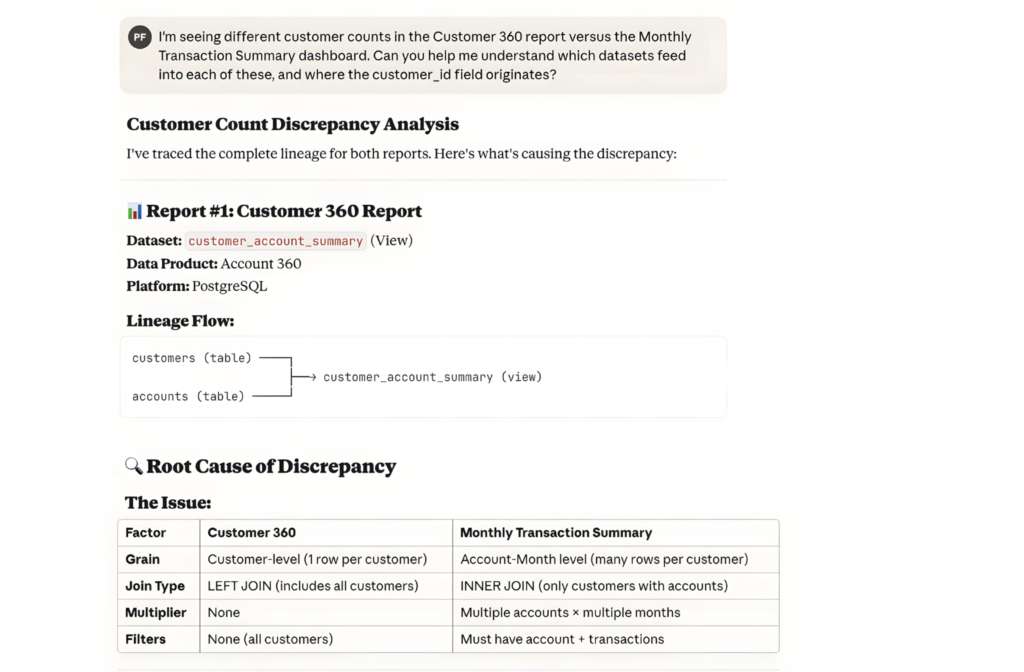

Discovery that serves humans and machines

For humans: Conversational interfaces like Ask DataHub let data users find what they’re looking for by asking questions in plain English: “Show me all tables with customer PII” or “What dashboards depend on the orders table?”—directly in Slack, Teams, or the DataHub interface. No need to learn catalog-specific syntax or remember exact table names.

“We added Ask DataHub in our data support workflow and it has immediately lowered the friction to getting answers from our data. People ask more questions, learn more on their own, and jump in to help each other. It’s become a driver of adoption and collaboration.”

– Connell Donaghy, Senior Software Engineer, Chime

For machines: GraphQL and REST APIs enable programmatic access at machine speed. Automation scripts query lineage before deploying changes. CI/CD pipelines validate data contracts. AI agents discover datasets, check compliance, and record their actions—all through APIs designed for machine consumption, not human-centric portals with APIs bolted on.

Column-level lineage across the full stack

Table-level lineage tells you that Table A feeds Table B. Column-level lineage tells you exactly which fields flow through which transformations—critical for compliance (which columns contain PII and where do they flow?) and impact analysis (what breaks if I change this specific field?).

Modern catalogs trace lineage across the entire data supply chain: from Kafka events through Spark transformations to Snowflake tables to Looker dashboards to SageMaker models. Real-time updates mean lineage reflects current state, not yesterday’s batch run.

Chime‘s software engineer, Sherin Thomas, describes lineage as their favorite DataHub capability:

“This is one really easy way of connecting the producers to the consumers. Now the producers know who is using their data. Consumers know where the data is coming from. And it is easier to have accountability mechanisms.”

Observability and governance unified with discovery

In Gen 2 architectures, discovery tells you where data lives. Observability tells you whether it’s healthy. Governance tells you whether it’s compliant. Three separate tools, three separate contexts to maintain.

Gen 3 unifies these concerns. Users don’t just find data, they see real-time quality metrics, freshness SLAs, and incident history in the same view. Governance policies apply automatically based on detected sensitivity. Quality improvements align with usage patterns because the system knows which datasets matter most.

This is the “iPhone moment” for data catalogs: The recognition that discovery, observability, and governance are more valuable together than as separate tools, just as a smartphone unifies phone, camera, and navigation into something greater than the sum of parts.

Automation that scales with your data estate

Manual metadata management doesn’t scale when you’re cataloging thousands of assets across hundreds of systems. Modern catalogs automate what Gen 2 expected humans to do:

- 100+ pre-built integrations with platforms like Snowflake, Databricks, BigQuery, dbt, Airflow, Tableau, Looker, Kafka, and SageMaker—metadata extraction happens automatically without custom development.

- AI-generated documentation analyzes transformation logic, data lineage relationships, and metadata to generate descriptions that would take hours to write manually.

- Automated classification and tagging detects sensitive data types (PII, financial information, health records) without manual review of every column.

- Metadata propagation via lineage means you document once at the source; downstream datasets inherit context automatically.

HashiCorp reduced ad hoc data inquiries from dozens daily to at most one per day through centralized documentation and automated metadata management. The catalog maintains itself rather than depending on humans who have more pressing work.

Enterprise security and access control

As catalogs become mission-critical infrastructure managing sensitive metadata at scale, security requirements intensify.

Modern platforms provide:

- Fine-grained access control (RBAC and ABAC) at table, column, or row level—a marketing analyst might discover aggregated segments while being blocked from individual PII.

- SSO integration with Okta, Azure AD, and Google Workspace.

- Domain-based access for decentralized organizations where the marketing team sees only marketing datasets.

- Full audit logs tracking who viewed or edited what and when—essential for SOC 2, GDPR, and regulatory compliance.

- Secure metadata ingestion through remote executors and credential isolation, ensuring metadata collection doesn’t create new security vulnerabilities.

See DataHub in action

How to evaluate AI data catalog solutions

Not every organization needs enterprise-grade catalog infrastructure immediately. Open-source tools and lighter-weight solutions serve early-stage companies and smaller teams effectively.

Evaluation checklist

| Evaluation criteria | Why it matters | Questions to ask |

| Real-time vs. batch metadata processing | Batch scans create gaps where metadata lags reality, breaking automated workflows and governance | How quickly do metadata changes appear in the catalog? Hours? Minutes? Seconds? |

| Column-level vs. table-level lineage | Compliance (GDPR, CCPA) requires knowing exactly which columns contain PII, not approximations | Can you trace a specific field through all transformations to all downstream consumers? |

| Unified vs. fragmented architecture | Separate tools for discovery, data governance, and observability create gaps in visibility when issues span concerns | Is there a single metadata graph, or do you need multiple tools that don’t share context? |

| Data-only vs. data + AI coverage | Traditional catalogs handle enterprise data assets but can’t track models, features, training datasets, and their relationships | Can you see lineage from training data through features to model predictions? |

| UI-only vs. API-first architecture | Platforms designed for human UIs can’t support autonomous agents and automated validation | Were APIs designed for machine consumption, or bolted on after the fact? |

| Open-source vs. proprietary foundation | Vendor lock-in creates migration risk when needs diverge from roadmap | Is there an open-source core that ensures platform evolution aligns with industry standards? |

| Integration ecosystem | Limited connectors mean manual work to catalog critical systems | How many pre-built connectors exist? Who maintains them? What’s the process for new integrations? |

Ready to see what a Gen 3 data catalog can do?

As we’ve seen, the gap between Gen 2 and Gen 3 isn’t a feature gap, it’s an architectural one.

If your current catalog is struggling to keep pace with AI initiatives, decentralized data architectures, or the shift to self-service, it may be time to see what’s possible with infrastructure built for where data management is heading.

Check out our product demos or explore the product today to see the difference.

Future-proof your data catalog

DataHub transforms enterprise metadata management with AI-powered discovery, intelligent observability, and automated governance.

Explore DataHub Cloud

Take a self-guided product tour to see DataHub Cloud in action.

Join the DataHub open source community

Join our 14,000+ community members to collaborate with the data practitioners who are shaping the future of data and AI.

FAQs

Recommended Next Reads