Building AI Agents You’d Trust in Production:

December 2025 Town Hall Highlights

Every data analyst has lived this nightmare: three days lost to Slack threads, email chains, and access tickets. You finally get the data. The field you need doesn’t exist. Your deadline is tomorrow.

This isn’t just a tools problem. It’s a context management problem. The knowledge you need exists somewhere—in someone’s head, buried in a Confluence page, or encoded in undocumented transformations three systems back. But there’s no central place where context lives alongside data.

Modern data teams are looking to AI agents to solve these bottlenecks. But they can’t solve what humans haven’t. Without structured context—where data came from, how it was transformed, how to use it correctly—AI agents hit the same walls your analysts do. Only, the problem is compounded at AI scale. Thousands of confident, confused agents delivering wrong answers at lightning speed. At our December community town hall, we showed how we’re solving this. DataHub is becoming a context platform that gives both humans and AI agents the rich context they need to work effectively. Not just metadata about tables and columns, but the full picture—business definitions, data quality rules, transformation logic, and organizational policies that make data usable.

Building the DataHub context platform: Three big releases shipping soon

DataHub’s evolution into a context platform builds on what DataHub already does today:

- Operate as a unified AI data catalog

- Deliver capabilities for data discovery, observability, and governance

- Integrate with 100+ systems across the data stack

We’re shipping three new releases that will extend these foundations for the agent era.

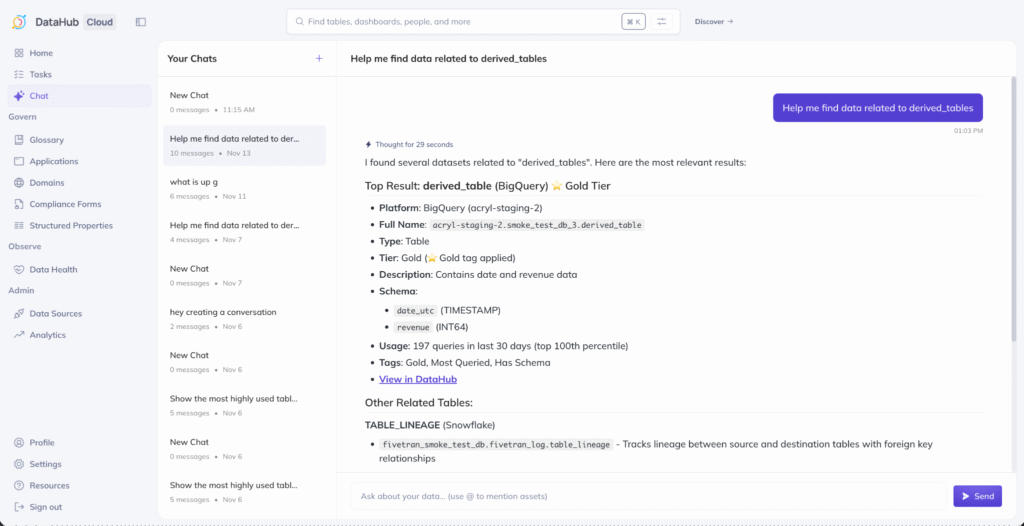

1. Ask DataHub (live in Private Beta)

Ask DataHub is a natural language agent embedded directly in DataHub and available where you work in Slack and Microsoft Teams.

Ask DataHub helps you:

- Find trustworthy data across your entire data ecosystem

- Understand the impact of changes before making them

- Assess data quality and proactively identify potential issues

- Generate accurate SQL and dbt models using DataHub’s rich context

- Manage metadata directly through conversation

When Ask DataHub lacks context, it asks clarifying questions instead of hallucinating. In a demo using fictional bank trading data, we asked it to calculate the average spread between bid and ask prices. It didn’t guess the security ID format or trading session start time. It stopped and asked.

You can customize Ask DataHub’s behavior through base instructions. Tell it to prioritize gold-tier assets, and it will factor that into every search. It’s available directly in DataHub’s search bar, in Slack, and in Teams.

Ask DataHub is live today in private beta for DataHub Cloud customers.

2. Data context graph

Traditional metadata platforms handle structured data well—tables, columns, and schemas. But agents also need access to unstructured knowledge that doesn’t live in your data warehouse:

- Notion documents defining what constitutes a “safe” loan-to-value ratio

- Confluence pages explaining your data retention policies

- Legacy knowledge of domain experts who understand the business context behind the numbers

The data context graph connects unstructured knowledge to DataHub’s data graph, making it discoverable and actionable.

You can create documents directly in DataHub or connect external sources like Notion, Confluence, and Google Drive. Then link those documents to specific data assets, creating a richer web of context that agents can navigate alongside your structured metadata.

Semantic search makes this unstructured knowledge accessible and useful. When asked a policy question like “Do all tables require retention policies?”, Ask DataHub can retrieve the answer directly from your retention policies document.

The real power shows up in query generation. In a demo for a fictional bank, Ask DataHub was prompted to generate SQL for “total trade volume on commercial real estate loan trades over the past quarter with a safe loan to value ratio.” Ask DataHub scanned multiple Notion documents defining what “safe” means for the bank (75% or less for commercial real estate), then incorporated that business knowledge directly into the generated SQL. No hallucination, no guessing—just accurate queries grounded in your organization’s actual definitions.

The context graph releases in December with DataHub v1.4.0 in both open source and Cloud, along with a new Notion connector.

3. Agent Context Kit

Building agents with DataHub’s context should be straightforward, regardless of your preferred framework. We’re releasing integrations and resources designed to meet developers where they already work:

- Framework integrations for LangChain, LangGraph, Google ADK, and CrewAI let you build agents in your preferred environment, using DataHub as your context platform for retrieval and persistence

- Open source repository of example agent recipes provides templates and starting points for common use cases

The Agent Context Kit will introduce a powerful feedback loop: agents don’t just read from the context graph, they write back to it.

For example, a LangChain agent generates a complex SQL query, then saves that query and its summary back to DataHub as a context document. Those agent memories become available to future queries, other agents, and humans exploring the catalog. The context graph grows richer with every interaction.

“Having an agent being able to access the rest of the context graph is great. But being able to persist these memories and data back into the context graph so they can be used for other agents, other queries, and future analysis is really, really powerful.”

— Nick Adams, Software Engineer, DataHub

The Agent Context Kit ships in December. Additional unstructured data source connectors arrive throughout 2026.

Also shipping in 2026

Beyond the context platform foundation, DataHub is expanding capabilities across observability, connectivity, and industry collaboration.

Snowflake’s Open Semantic Interchange (OSI)

DataHub is participating in Snowflake’s OSI initiative, a working group defining common metric definitions that work across systems. Once the standard is set, DataHub will make these standardized semantic definitions discoverable and governable across your data supply chain—eliminating the problem of metrics that mean different things in different tools.

Expanded ecosystem connectivity

Microsoft Fabric support begins with Data Factory and One Lake connectors, with additional Fabric ecosystem connectors planned based on community demand. A Monte Carlo integration rounds out observability support alongside existing Great Expectations and dbt test integrations, so your data quality checks are all in one place.

We’re also building tools that accelerate production-grade connector development. Expect a dramatically expanded integration footprint in 2026.

Enhanced observability features

Production environments run thousands of data quality checks. New capabilities make them manageable: a data health dashboard for daily triage, assertion notes and runbooks for faster resolution, and enhanced data contracts with structured properties for organization-specific enforcement details like SLA windows and redistribution policies.

The foundation that makes this possible

These 2026 capabilities build on extraordinary momentum in 2025. Our open source community submitted nearly 2,400 pull requests from 177 contributors—1.3 million lines of code that expanded DataHub’s capabilities across integrations, observability, and core platform infrastructure.

Our progress reflects everyone building alongside us. Beyond community contributions, we shipped major product releases throughout the year that advanced what’s possible with DataHub.

Major 2025 Releases

DataHub 1.0

This release marked the project’s fifth birthday with a complete UX overhaul:

- Simplified homepage with customizable layouts focused on core workflows

- Reimagined search with quick filters and logical grouping by platform, schema, or database

- Improved lineage exploration at the asset and column level for complex production graphs

- Data observability front and center with data quality results and data quality check history surfaced directly at the asset level

DataHub MCP Server

Our MCP Server launched earlier this year and saw rapid adoption. By providing metadata context to LLMs, it enables powerful impact analysis and agent development.

Teams report using it not just for data work but for day-to-day software engineering and incident management. Block uses it in combination with their AI agent Goose to accelerate incident response from days to minutes with a few short, conversational messages.

New metadata ingestion sources

New metadata ingestion sources and performance tuning on popular sources ensures DataHub handles high-volume environments without bottlenecks. New connectors included:

Additional sources are in development from community and core team contributions, including Azure Data Factory, Confluent Cloud, and Flink.

Iceberg REST catalog integration

The Iceberg REST catalog integration solved a common pain point for data lakehouse implementations: scattered access policies that must be configured individually across systems. Manually configuring policies in multiple places creates fragility, policy drift, and operational burden. With this integration, you define your access policies once in DataHub, and the Iceberg REST API enforces it everywhere.

Demandbase processes a petabyte of data with centralized access policy management through DataHub’s Iceberg REST catalog integration. The integration delivers automated lineage tracking as tables are read and written, eliminating manual instrumentation.

Start building with context

The path from fragmented data chaos to agentic data use runs through context. DataHub is evolving into the platform that curates context from structured data and unstructured knowledge, giving both humans and agents the complete picture they need to work effectively.

Watch the full town hall recording for a deeper dive.

Ready to explore how context changes what’s possible with your data? Join the conversation alongside 14,000+ data professionals in our community. Or, take a self-guided product tour to see DataHub in action.

Recommended Next Reads