Data Lineage:

What It Is and Why It Matters

Data Lineage:

What It Is and Why It Matters

Imagine you are working in a company with an advanced data ecosystem. Suddenly, you receive a message: “Our dashboard is broken; the numbers aren’t adding up.”

In many cases, understanding data lineage is critical to identifying broken pipelines of data issues. In this blog post, we will explore the concept of data lineage, its importance, and some fundamental approaches to establishing data lineage within your data ecosystem.

What is Data Lineage?

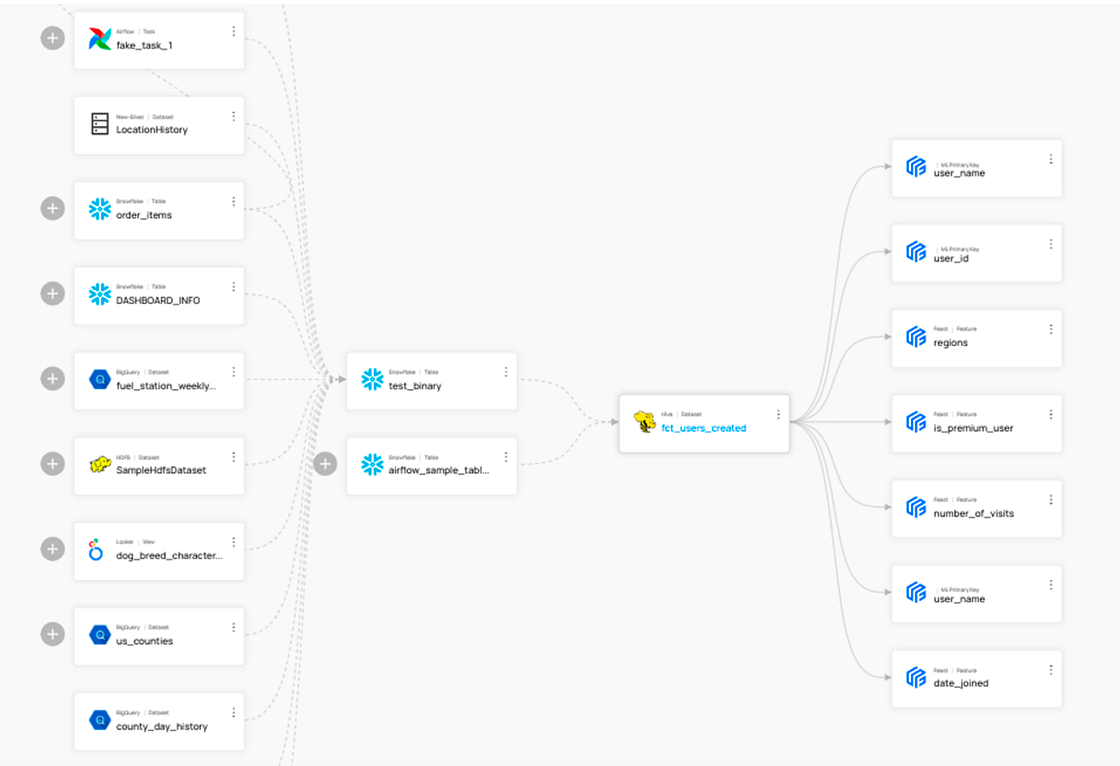

Data lineage is a map that shows how data flows through your organization. It details where your data originates, how it travels, and where it ultimately ends up. This can happen within a single system (like data moving between Snowflake tables) or across various platforms. Here’s what it might look like:

In this process, data flows from Airflow/BigQuery to Snowflake tables, then to the Hive dataset, and ultimately to the features of Machine Learning Models.

For teams building data pipelines, a practical option is to use a data lineage explorer to visualize how data moves in context.

Key Terminologies in Data Lineage

To better understand data lineage, it’s important to familiarize yourself with a few key terms:

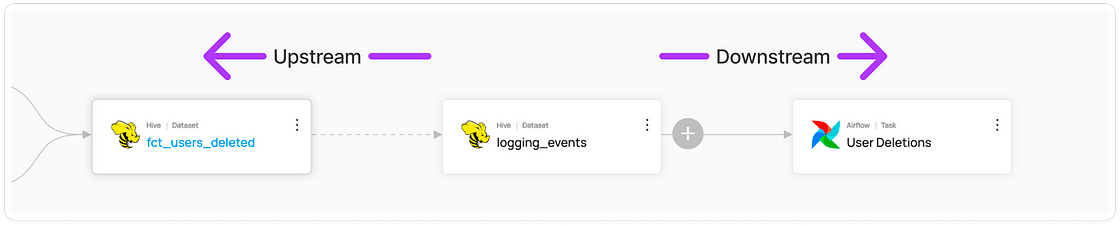

Upstream and Downstream

These terms describe the direction of data flow. “Upstream” refers to the source or origins of the data, while “Downstream” describes where the data is sent or used.

Keep in mind that the concepts of upstream and downstream are context-dependent. For instance, when looking at the logging_events Hive dataset, the fct_users_deleted dataset is upstream, while the User Deletions Airflow task is downstream.

However, in the scenario where we focus on the User Deletions task, both logging_events and fct_users_deleted are viewed as upstream entities. This illustrates how data relationships can shift depending on the specific data flow or processing task being considered.

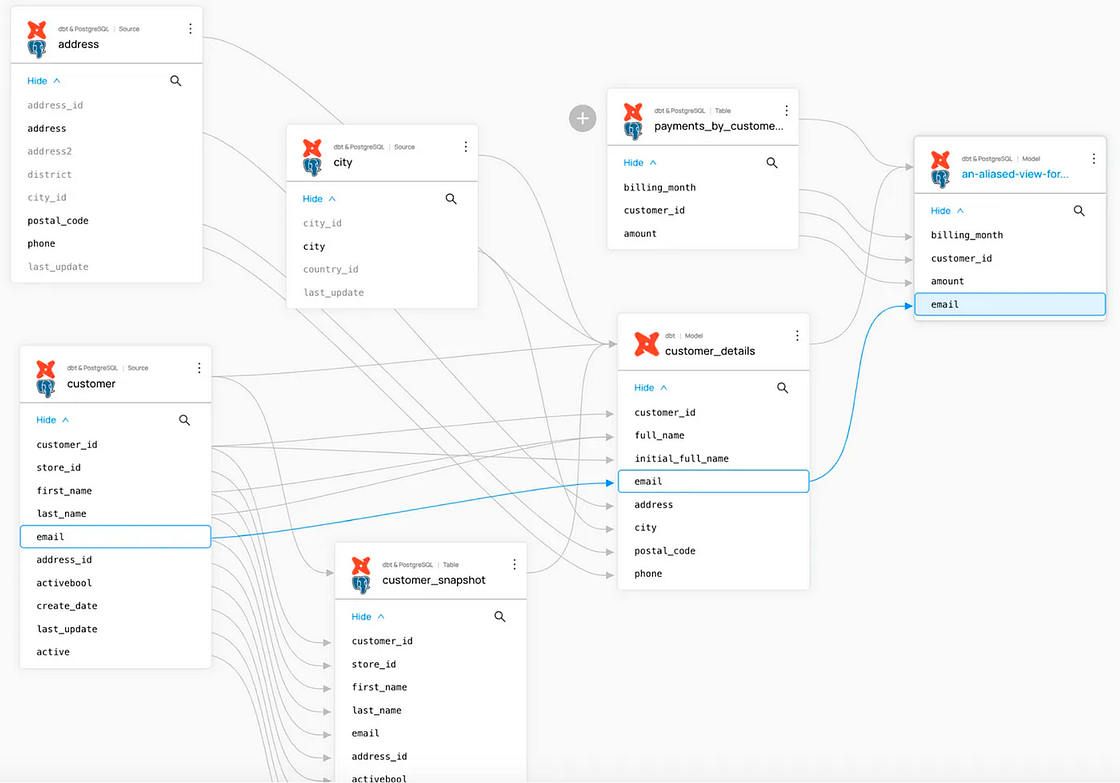

Column-level Lineage

Column-level lineage tracks changes and movements for each specific data column. This approach is often contrasted with table-level lineage, which specifies lineage at the table level.

Below is how column-level lineage can be set with dbt and Postgres tables.

To truly understand how specific fields evolve as they move through your data pipelines, it helps to explore column-level lineage, which reveals transformations at the most granular level and enhances your ability to debug issues and ensure regulatory compliance.

SQL Parsing

For teams running SQL-heavy pipelines, understanding SQL lineage can reveal how individual queries influence downstream analytics. Although not a requirement for detecting lineage, SQL parsing is frequently used to analyze and establish lineage by dissecting SQL queries to map out data flows. This is a powerful approach to automatically detecting interdependencies between datasets in SQL databases (i.e. Redshift, Postgres, etc.), transformation tools (ie. dbt), and/or reporting tools (i.e. PowerBI, Looker) that leverage SQL to transform datasets, but may not have out-of-the-box support for column-level lineage.

Why is Lineage Important?

In today’s complex data environments, many tools and transformations contribute to a single piece of data or dashboard. It’s crucial to understand data lineage for several reasons:

Maintaining Data Integrity

Data lineage offers transparency regarding data origins and transformations. It empowers data teams to detect anomalies, validate data sources, and rectify inconsistencies swiftly. This procedure ensures that data utilized in crucial business operations is clean, thoroughly documented, and current. Consequently, it prevents errors and enhances overall data quality.

Simplify and Refine Complex Relationships

The easier it gets to store and transform datasets, the easier it becomes to prioritize speed of delivery over methodical data modeling. We’ve all been there: your main stakeholder has a critical data request and needs it right this very second. Since you don’t have the luxury of time on your side, you resort to building a one-off ETL job, Chart, Dashboard, or whatever they might need. These resources quickly pile up and it can be difficult to know how they all fit together. Lineage graphs provide a visual representation of what otherwise can feel like spaghetti code, making it much easier to identify areas of high complexity, redundancy, and more.

Lineage Impact Analysis

Have you ever encountered a problem in a crucial data table? If a particular table has issues and it’s linked to other data areas, the effects can ripple outward. Robust lineage tools can help you visualize these connections clearly, allowing you to notify the appropriate parties and resolve issues efficiently.

Propagate Metadata Across Lineage

Effective metadata management and data governance require you to tag data and monitor ownership throughout your data ecosystem. While this can be a daunting task, propagating (aka copying) metadata across lineage relationships can greatly increase the coverage and accuracy of tagging, ownership assignment, and more.

Sourcing and Managing Data Lineage

Data Lineage within a Single System or Tool

It’s becoming more and more common for data warehouses and ETL tools to generate data lineage, making it easier than ever to understand the interdependencies of resources within its system. For example, BigQuery and Snowflake have system tables that you can easily query to understand how datasets depend on one another. dbt supports declaring lineage relationships within model definitions, making it very easy to visualize and manage your various dbt model dependencies.

However, these resources do not support cross-system lineage. For instance, it can be difficult to understand the full dependency chain of Snowflake datasets that may or may not be transformed using dbt; nor will it provide visibility into how data is reported in downstream BI Tools. Therefore, relying solely on these native solutions can be limiting if your data ecosystem is complex.

Data Lineage Across Multiple Systems or Tools

The most scalable solution for sourcing and managing lineage across systems is to integrate your data stack with a data catalog, specifically one that offers robust support for automatic detection of table- and column-level lineage. If your data stack includes bespoke solutions or legacy systems, it’s likely that lineage information will not be readily available. In this case, it’s critical to choose a data catalog that supports custom integrations and has easy-to-use tools to programmatically assign and manage lineage to ensure high coverage and accuracy of cross-system dependencies.

For teams integrating data lineage into ML workflows, the concept of data lineage machine learning can help ensure more reliable model outputs.

Simplified Data Lineage with DataHub

DataHub, the #1 open-source metadata platform, supports automatic table- and column-level lineage detection from BigQuery, Snowflake, dbt, Looker, PowerBI, and 100+ other modern data tools. For data tools with limited native lineage tracking, DataHub’s SQL Parser detects lineage with 97–99% accuracy, ensuring teams will have high-quality lineage graphs across all corners of their data stack.

Even more, DataHub has robust support for defining and managing programmatically via YAML and/or API, as well as manual curation via the DataHub UI. Check out the DataHub Feature Overview to learn more!

Recommended Next Reads