MCP Server 101:

Enabling Context-Rich AI Agents with DataHub

With the DataHub MCP Server, agents don’t just generate answers. They apply critical context to make the right decisions about which data to use and how.

Everyone’s excited about AI. Teams are racing to integrate models like Gemini 2.5, GPT-4o, and Claude into their analytics stack. But the results aren’t always what we hoped for.

We’ve all been burned by vibe-coded queries that hit the wrong tables, misinterpret business logic, or completely miss the mark. Why? Because these models lack one critical ingredient: context.

This post breaks down why context is critical for AI agents, what an MCP server is, and how the DataHub MCP Server enables agents to discover, understand, and act on enterprise data with the right tools and context.

Why AI agents fail without metadata context

Tools like Snowflake Cortex and Databricks Genie make querying data feel effortless. Just type a question and watch the magic happen.

But the magic fails without organizational context.

Ask, “What’s our mobile app conversion rate?” and you might get a confident—but entirely wrong—answer.

In one test we ran during our June Town Hall, Genie confidently reported that the mobile app conversion rate was 200%: a number that’s not just wrong, but impossible.

Why does this happen?

Because the agent doesn’t understand how your business defines that metric.

Today’s agents can analyze data, but they don’t know:

- Which tables are trustworthy

- How key metrics are defined

- What governance rules apply

- How to best query a table

If you hired a new data analyst and dropped them into your stack without any onboarding—no metric definitions, no visibility into which dashboards are trusted, no tribal knowledge—they’d likely fail too. That’s exactly what we’re doing to LLMs when we hand them a text-to-SQL problem and expect them to figure it out.

Like human analysts, AI agents need context to succeed.

That’s why context engineering is emerging as a critical practice: the work of embedding domain-specific knowledge, like trusted tables, definitions, and query patterns, into the systems that support AI agents.
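To make this concrete, here is a minimal Python sketch of context engineering. It assumes hypothetical metadata records (`TableMetadata`, `MetricDefinition`) rather than any real catalog schema. Untrusted tables are filtered out, and the remaining tables and the agreed metric definitions are rendered into the prompt the model sees.

```python
from dataclasses import dataclass, field


# Hypothetical metadata records; field names are illustrative, not a real catalog schema.
@dataclass
class TableMetadata:
    name: str
    certified: bool  # trust signal: only certified tables are offered to the model
    description: str
    tags: list[str] = field(default_factory=list)


@dataclass
class MetricDefinition:
    name: str
    definition: str


def build_context(question: str, tables: list[TableMetadata],
                  metrics: list[MetricDefinition]) -> str:
    """Render trusted tables and metric definitions into a prompt for the LLM."""
    lines = ["Only query these certified tables:"]
    for t in tables:
        if not t.certified:
            continue  # drop untrusted tables so the model never considers them
        tag_note = f" [tags: {', '.join(t.tags)}]" if t.tags else ""
        lines.append(f"- {t.name}: {t.description}{tag_note}")
    lines.append("Metric definitions:")
    for m in metrics:
        lines.append(f"- {m.name}: {m.definition}")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


if __name__ == "__main__":
    tables = [
        TableMetadata("analytics.mobile_sessions", True, "One row per mobile app session", ["pii"]),
        TableMetadata("scratch.sessions_copy", False, "Ad hoc copy, not maintained"),
    ]
    # Hypothetical definition; as a ratio of sessions it can never exceed 100%.
    metrics = [MetricDefinition("mobile_app_conversion_rate",
                                "sessions with a purchase / total mobile app sessions")]
    print(build_context("What's our mobile app conversion rate?", tables, metrics))
```

In practice this metadata would come from a catalog instead of hard-coded records. The point of the sketch is only that the model sees the trusted tables and the agreed definition before it writes any SQL.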

MCP servers help bring this context into AI workflows in a standardized way.

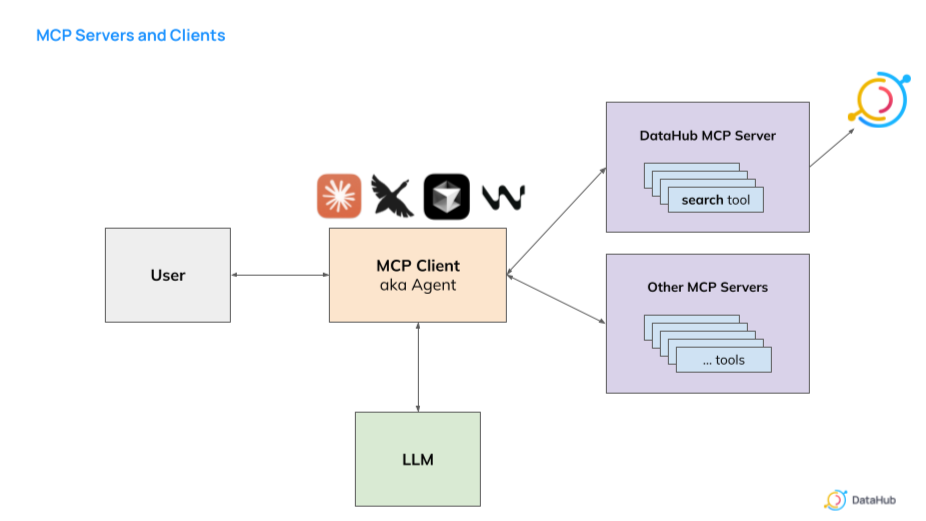

How MCP servers power AI agent tool use

To understand why MCP servers matter, let’s recap how modern AI agents function.

At its core, an AI agent combines an LLM with a set of tools it can call to retrieve information, perform calculations, or take action. For data teams looking to operationalize this approach, an MCP server provides a standardized way to ground those actions in trusted enterprise data.

How agents use tools

Let’s look at a simple example from outside the data world to illustrate how agent tool use works.

Imagine you ask an AI chatbot, “Will it rain tomorrow?” The model doesn’t guess; it orchestrates a tool-calling process that might look something like this:

1. User query: The question gets passed to the agent.

2. Agent gathers definitions of tool inputs and outputs: The agent has access to the message history and to tools like `get_user_location` and `get_weather`. It passes the user query and the tool definitions to the LLM.

3. LLM decides it needs more information: The LLM looks at the available tools and the query, then decides it needs more information. It returns a request for the agent to call the `get_user_location` tool.

4. Agent executes the requested tool call: The agent calls `get_user_location`, gets back `"San Francisco, CA"`, and adds that result to the message history.

5. Repeat steps 3–4 until the LLM has enough information: The LLM reviews the updated message history and asks the agent to call the `get_weather` tool with the location, San Francisco. The agent calls `get_weather` with the input `"San Francisco, CA"`, gets back `temp: "60 F", rain: 0`, and adds that output to the message history.

6. LLM decision: The LLM decides it has enough information to answer the question and returns a natural-language response to the agent: “No, it will not rain tomorrow.”

7. Final response: The agent passes the final response back to the user.
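The loop above can be sketched in a few lines of Python. This is an illustration, not any particular agent framework’s API: `fake_llm` is a hypothetical scripted stand-in for a real model, and the two tools return hard-coded values that match the example.

```python
import json


# Stub tools with hard-coded results matching the weather example.
def get_user_location() -> str:
    return "San Francisco, CA"


def get_weather(location: str) -> dict:
    # A real tool would query a forecast API for `location`; this stub ignores it.
    return {"temp": "60 F", "rain": 0}


TOOLS = {"get_user_location": get_user_location, "get_weather": get_weather}
# Step 2: tool definitions the agent sends to the LLM alongside the query.
TOOL_DEFS = [
    {"name": "get_user_location", "description": "Return the user's city.", "params": {}},
    {"name": "get_weather", "description": "Return tomorrow's forecast for a city.",
     "params": {"location": "string"}},
]


def fake_llm(history: list, tool_defs: list) -> dict:
    """Hypothetical scripted stand-in for an LLM (a real model would read tool_defs)."""
    results = [m for m in history if m["role"] == "tool"]
    if not results:  # Step 3: the model needs the user's location first
        return {"type": "tool_call", "name": "get_user_location", "arguments": {}}
    if len(results) == 1:  # Step 5: with a location in hand, ask for the weather
        return {"type": "tool_call", "name": "get_weather",
                "arguments": {"location": results[0]["content"]}}
    forecast = json.loads(results[-1]["content"])  # Step 6: enough info to answer
    if forecast["rain"] == 0:
        return {"type": "answer", "content": "No, it will not rain tomorrow."}
    return {"type": "answer", "content": "Yes, it will rain tomorrow."}


def run_agent(query: str, llm=fake_llm, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": query}]  # Step 1
    for _ in range(max_steps):
        decision = llm(history, TOOL_DEFS)
        if decision["type"] == "answer":
            return decision["content"]  # Step 7: final response to the user
        # Step 4: execute the requested tool and record the result in the history
        output = TOOLS[decision["name"]](**decision["arguments"])
        content = output if isinstance(output, str) else json.dumps(output)
        history.append({"role": "tool", "name": decision["name"], "content": content})
    raise RuntimeError("no answer within max_steps")


print(run_agent("Will it rain tomorrow?"))  # No, it will not rain tomorrow.
```

The `max_steps` cap is a common safeguard so a confused model cannot keep requesting tools forever.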

This kind of back-and-forth tool orchestration is powerful, but there’s a catch.

Most agents today rely on custom, hard-coded tools. If you want an agent to talk to your internal metadata service, your BI dashboard, or your data catalog, you have to build the integration.

And let’s be honest: are you really going to implement tool wrappers for every app in your stack?

That’s where MCP servers come in.

What’s an MCP server? Tool standardization for AI agents

To act as effective orchestrators, AI agents need to know:

- What tools are available

- How to execute each tool

That’s why MCP servers are important, even when we already have APIs. An MCP server acts as an LLM-oriented curation layer and documentation layer on top of those APIs.

You can think of an MCP server as both a tool registry and a tool executor for your AI agent.
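As a rough illustration, the Python sketch below plays both roles. It mimics the shape of MCP’s JSON-RPC `tools/list` and `tools/call` messages, but it is a simplified, hypothetical stand-in (`ToolRegistry` is not part of any SDK); a real server would use an MCP SDK and a transport such as stdio.

```python
import json


class ToolRegistry:
    """Hypothetical MCP-style tool registry and executor (not the official MCP SDK)."""

    def __init__(self):
        self._tools = {}

    def register(self, name: str, description: str, input_schema: dict, fn):
        # The description and JSON Schema form the LLM-oriented documentation over `fn`.
        self._tools[name] = {"description": description, "inputSchema": input_schema, "fn": fn}

    def handle(self, request: dict) -> dict:
        """Answer one JSON-RPC 2.0 request for the tools/list or tools/call method."""
        method, req_id = request.get("method"), request.get("id")
        if method == "tools/list":  # registry role: advertise tools and their schemas
            tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                     for n, t in self._tools.items()]
            return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
        if method == "tools/call":  # executor role: run the named tool with its arguments
            params = request.get("params", {})
            tool = self._tools.get(params.get("name"))
            if tool is None:
                return {"jsonrpc": "2.0", "id": req_id,
                        "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
            output = tool["fn"](**params.get("arguments", {}))
            return {"jsonrpc": "2.0", "id": req_id,
                    "result": {"content": [{"type": "text", "text": json.dumps(output)}],
                               "isError": False}}
        return {"jsonrpc": "2.0", "id": req_id,
                "error": {"code": -32601, "message": "Method not found"}}
```

Registering the weather tool from the earlier example and calling it would look like this:

```python
registry = ToolRegistry()
registry.register(
    "get_weather", "Get tomorrow's forecast for a city.",
    {"type": "object", "properties": {"location": {"type": "string"}}, "required": ["location"]},
    lambda location: {"temp": "60 F", "rain": 0},
)
print(registry.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
```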

Note: The MCP specification includes more than just tool definitions and execution. It also supports concepts like resources and prompt templates. However, these parts of the spec are less widely adopted today compared to the core tool calling functionality.

How the DataHub MCP Server works

Let’s walk through how the DataHub MCP Server works with a concrete example. Imagine asking an AI agent, “What are the right tables to analyze customer lifetime value?”

Before, that meant opening DataHub, manually browsing datasets, checking documentation, trust signals, and table owners, and then piecing it all together yourself.

Now, with DataHub’s MCP Server, an agent like Claude Desktop can do that discovery work for you—automatically and reliably.

Here’s how it works under the hood:

- The agent connects to DataHub’s MCP Server, which exposes a set of tools like search, lineage traversal, and metadata lookup.

- The agent receives a list of these DataHub-specific tools, along with their input/output definitions, via the MCP protocol.

- The LLM interprets the user query (“What are the right tables for customer lifetime value?”), decides which tool to call (e.g., the search tool) and passes the relevant arguments to the agent.

- The agent calls that tool via the DataHub MCP Server, requesting a metadata search scoped to trusted CLV-related assets.

- The DataHub MCP Server handles execution and returns the result, which may include column-level lineage, ownership, documentation, tags, and quality signals.

- The agent passes that information back to the LLM, which synthesizes a clear natural-language summary.
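Here is a hedged, self-contained sketch of the middle steps, assuming a hypothetical `search` tool over an in-memory catalog. The dataset names, fields, and keyword ranking are made up for illustration and are not the DataHub MCP Server’s actual tool schema; the sketch only shows how trust signals can shape what the agent gets back.

```python
import json

# Hypothetical in-memory stand-in for catalog metadata (not DataHub's real schema).
CATALOG = [
    {"name": "analytics.customer_ltv", "certified": True, "owners": ["data-platform"],
     "description": "Customer lifetime value per customer, refreshed daily",
     "tags": ["clv", "finance"]},
    {"name": "analytics.customer_orders", "certified": True, "owners": ["sales-eng"],
     "description": "Order-level revenue per customer", "tags": ["revenue"]},
    {"name": "scratch.clv_backup", "certified": False, "owners": [],
     "description": "Old copy of customer lifetime value", "tags": ["clv"]},
]


def search(query: str, certified_only: bool = True) -> list[dict]:
    """Hypothetical search tool: keyword match, optionally restricted to certified assets."""
    terms = query.lower().split()
    hits = []
    for ds in CATALOG:
        text = " ".join([ds["name"], ds["description"], *ds["tags"]]).lower()
        score = sum(term in text for term in terms)  # count matching query terms
        if score and (ds["certified"] or not certified_only):
            hits.append((score, ds))
    hits.sort(key=lambda pair: pair[0], reverse=True)  # best matches first
    return [ds for _, ds in hits]


def handle_tools_call(request: dict) -> dict:
    """Server side: execute the requested tool and wrap the result as MCP-style text content."""
    params = request["params"]
    if params["name"] != "search":
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    results = search(**params["arguments"])
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": json.dumps(results)}],
                       "isError": False}}


# The arguments the LLM chose become a tools/call request sent by the agent.
request = {"jsonrpc": "2.0", "id": 7, "method": "tools/call",
           "params": {"name": "search", "arguments": {"query": "customer lifetime value"}}}
response = handle_tools_call(request)
# The agent would pass this text back to the LLM for summarization.
print(response["result"]["content"][0]["text"])
```

With `certified_only` defaulting to `True`, the uncertified backup table never reaches the LLM, even though it matches the query just as well as the certified one.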

This is what turns an LLM from a generic chatbot into a domain-aware data assistant, powered by the metadata your team already curates in DataHub.

Explore our detailed overview blog for a closer look at the DataHub MCP Server, complete with use cases and examples.


As data teams explore context-rich AI workflows, Block’s AI agents use case shows how the DataHub MCP Server can help agents reason over enterprise data with proper context.


The future of AI agents depends on metadata context

With DataHub’s MCP Server, AI agents can navigate complex metadata, understand organizational logic, and deliver insights that were once locked behind manual workflows.

This isn’t just an integration. It’s infrastructure for the future of intelligent data systems.

How to get started with the DataHub MCP Server

Ready to bring intelligent context to your AI stack?

Explore the DataHub MCP Server

Visit DataHub’s documentation to begin your implementation today.

Join our open source community

Collaborate with 13,000+ members building the next generation of AI-native data systems. Join our community Slack to get started.

About the authors

Harshal Sheth is a Founding Engineer at DataHub. A 2022 Yale Computer Science graduate, he has gained diverse technical experience through internships at Google, Citadel Securities, Instabase, and 8VC. At Google, he developed tracing tools for Fuchsia OS, while at Citadel Securities, he worked on low-latency technologies. His background spans distributed systems, venture capital evaluation, and scalable infrastructure development.

Gabe Lyons is a Founding Engineer at DataHub. Before joining DataHub in 2021, Gabe was a Software Engineer at Airbnb, where he led several initiatives building tooling for Data Quality and Data Discovery. Previously, he created several popular Flash games as a freelance developer. Gabe holds a Computer Science degree from Brown University and brings practical expertise in building scalable data systems that power modern metadata management solutions.
