The AI Stack — and the evolution of the Metadata Platform

When I wrote about the evolution of metadata architectures in 2020, I focused primarily on the needs for metadata platforms as driven by traditional data ecosystems and data engineering workflows. Since then, the rapid advancement of AI and its integration into various aspects of business operations have brought new challenges and requirements for metadata management, necessitating an update to the blog post and some new predictions about the future!

In this post, I’ll explore how the AI stack is influencing the evolution of metadata platforms and how a well-architected system can serve both data and AI requirements effectively. This evolution, I propose, marks the emergence of a fourth generation of metadata platforms.

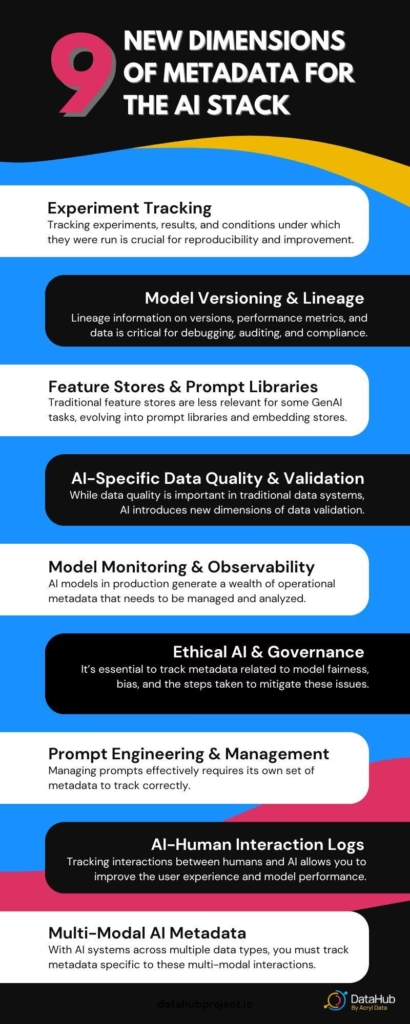

The AI Stack: New Dimensions of Metadata

As organizations extend metadata platforms to support AI-driven workflows, organizations increasingly rely on AI-ready data to ensure reproducibility and trustworthy model outcomes.

The AI stack introduces several new dimensions to the metadata landscape, each bringing its own set of challenges and opportunities as identified in recent research[8].

I like to break this down into nine different categories:

1. Experiment Tracking

Unlike traditional data pipelines, AI workflows involve extensive experimentation. Data scientists run numerous iterations with different hyperparameters, model architectures, and datasets. Tracking these experiments, their results, and the conditions under which they were run is crucial for reproducibility and improvement.

In practice, this means capturing metadata about each experiment run, including:

- Input datasets and their versions

- Model architecture and hyper-parameters

- Training environment details (hardware, software versions)

- Performance metrics and evaluation results

- Output artifacts (model weights, checkpoints)

For GenAI models like LLMs, this also includes:

- Pre-training and fine-tuning datasets

- Tokenization and vocabulary details

- Training compute resources and time

This level of detail enables data scientists to reproduce results, compare different approaches, and build upon previous work effectively.

2. Model Versioning and Lineage

As models evolve, it’s essential to maintain a clear record of different versions, their performance metrics, and the data they were trained on. This lineage information is critical for debugging, auditing, and compliance purposes.

Model versioning goes beyond simple version numbers. It involves tracking:

- The complete provenance of a model, from raw data to deployed artifact

- Changes in model architecture or training process between versions

- Performance differences across versions

- Approval and deployment status of each version

For LLMs, additional versioning metadata includes:

- Base model and any adaptations (e.g., fine-tuning, prompt engineering)

- Versioning of prompts used for specific tasks

This detailed versioning enables teams to roll back to previous versions if issues arise, understand the impact of changes, and maintain a clear audit trail for regulatory compliance.

3. Feature Stores and Prompt Libraries

While traditional feature stores may become less relevant for some GenAI tasks, they evolve into prompt libraries and embedding stores:

- Prompt templates and their versions

- Embedding models and their versions

- Usage tracking of prompts across different applications

- Performance metrics of different prompts for specific tasks

4. AI-Specific Data Quality and Validation

While data quality is important in traditional data systems, AI introduces new dimensions of data validation. This includes checking for data drift, model drift, and ensuring the statistical properties of training data match those of production data.

AI-specific data quality metadata might include:

- Distribution statistics of input features over time

- Detected anomalies or outliers in input data

- Drift metrics between training and production data distributions

- Model performance metrics on different data slices

For GenAI:

- Prompt quality metrics (e.g., clarity, task completion rate)

- Output coherence and relevance metrics

- Toxicity and safety checks on generated content

Tracking this metadata allows teams to proactively identify and address issues that could impact model performance in production.

5. Model Monitoring and Observability

AI models in production require continuous monitoring of their performance, input distributions, and output quality. This generates a wealth of operational metadata that needs to be managed and analyzed.

Observability metadata for AI models includes:

- Real-time performance metrics

- Input data statistics and detected anomalies

- Prediction latency and throughput metrics

- Resource utilization (CPU, memory, GPU)

- Alerts and incidents related to model performance

For LLMs and GenAI:

- Token usage and costs

- Prompt-response pairs for analysis

- Generation diversity metrics

- Safety and content policy compliance metrics

This operational metadata is crucial for maintaining the health and performance of AI systems in production environments.

6. Ethical AI and Governance

With increasing focus on responsible AI, there’s a need to track metadata related to model fairness, bias, and the steps taken to mitigate these issues.

Ethical AI metadata might include:

- Fairness metrics across different demographic groups

- Documentation of bias mitigation techniques applied

- Results of ethical reviews or audits

- Usage restrictions or guidelines for models

- Stakeholder sign-offs and approvals

For GenAI this expands to:

- Copyright and attribution tracking for generated content

- Transparency about AI-generated content

- Safeguards against misuse (e.g., deep fakes, misinformation)

- Model cards detailing capabilities, limitations, and intended uses

This metadata is essential for ensuring AI systems are deployed responsibly and in compliance with ethical guidelines and regulations.

7. Prompt Engineering and Management

Prompt engineering has become a crucial aspect of working with large language models and other generative AI systems. Managing prompts effectively requires its own set of metadata to track performance, iterations, and use cases.

A new category specific to GenAI:

- Prompt version control and A/B testing results

- Prompt performance metrics for different tasks

- Metadata about prompt chaining or few-shot learning examples

- Integration points between prompts and traditional code

This metadata helps teams optimize their use of language models, track the evolution of prompts, and understand which prompts work best for specific tasks or user groups.

8. AI-Human Interaction Logs

As AI systems become more interactive, especially in conversational interfaces, tracking the interactions between humans and AI becomes crucial for improving user experience and model performance.

Another new category for interactive AI systems:

- Conversation histories and user feedback

- User satisfaction metrics and improvement suggestions

- Metadata about when and why human intervention was needed

This interaction data helps in fine-tuning models, improving response quality, and understanding user needs and pain points in AI-driven interactions.

9. Multi-Modal AI Metadata

With the increasing prevalence of AI systems that work across multiple data types (e.g., text, images, audio), there’s a need to track metadata specific to these multimodal interactions.

For AI systems working with multiple types of data:

- Cross-modal alignment information (e.g., image-text pairs)

- Modality-specific preprocessing steps and parameters

- Performance metrics across different modalities

This metadata is crucial for ensuring coherence across different data types, optimizing multi-modal model performance, and understanding how different modalities interact within the AI system.

As we explore how metadata supports AI workflows, Decoding AI and Metadata sheds light on the role of metadata.

Evolving the Metadata Platform for AI: The Fourth Generation

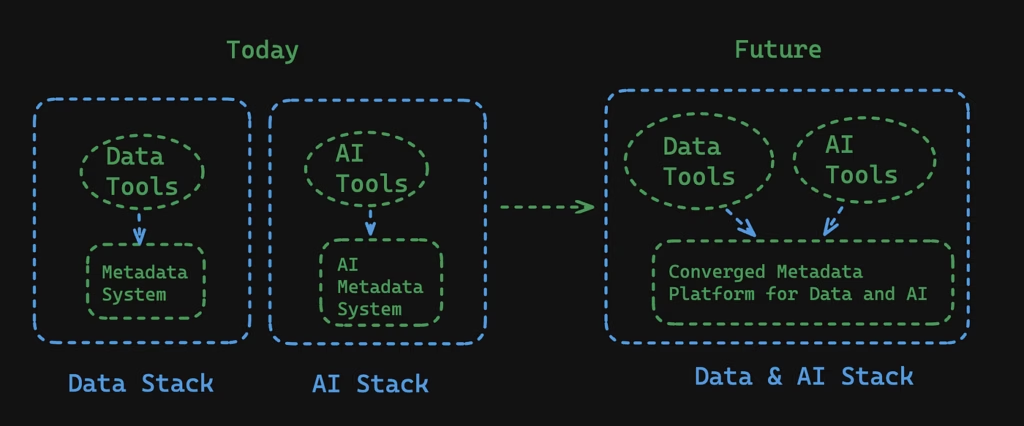

Will metadata platforms for AI continue to be purpose-built for AI use-cases? I don’t think so.

Over the next few years, we will see a convergence of AI and data metadata platforms and organizations will start requiring a single metadata system (codenamed The Metadata System or TMS for the rest of this blog for brevity) to be the system of record for all AI and data metadata. This convergence of AI and data metadata systems is not a novel concept, but rather an expected evolution as AI development matures.

This pattern of convergence has been observed in other industries as they’ve grown and integrated new technologies. In the telecommunications sector, we saw a significant shift from separate networks for voice, data, and video to unified, converged networks that efficiently handle all types of traffic[1]. This integration enabled new services, improved efficiency, and enhanced the overall customer experience[2]. Similarly, the banking industry underwent a transformation when it moved from siloed systems for different products (savings, loans, investments) to integrated core banking systems[3]. This unification provided a holistic view of customer relationships, streamlined operations, and enabled more sophisticated financial products and services[4]. In both cases, the convergence was driven by the need for greater efficiency, improved customer service, and the ability to innovate more rapidly[5].

I expect similar drivers to push for unification of AI and data metadata systems. As AI transitions from an emerging technology to a core business function, organizations are recognizing that keeping AI metadata separate creates unnecessary complexity and hinders the potential for synergy with existing data systems[6]. Just as telecom and banking leaders realized the limitations of fragmented systems, forward-thinking organizations are now understanding that a unified metadata platform is crucial for maximizing the value of both their data and AI initiatives[7]. We are already seeing the early trends of this happening in data with both Databricks and Snowflake positioning themselves closer to an “integrated Data and AI” stack for the enterprise.

To effectively serve both traditional data and AI workflows, a metadata platform needs to evolve beyond the third generation I described previously. This evolution represents a fourth generation of metadata platforms.

Let’s explore the key characteristics of this new generation:

Flexible and Extensible Data Model

The metadata model needs to be flexible enough to accommodate new entity types specific to AI workflows, such as experiments, model versions, and features. Since 2021, Acryl Data, in partnership with the open-source community, including companies like LinkedIn, Netflix, Pinterest, Expedia and Optum, has been evolving DataHub’s metadata model to include these AI-specific entities while maintaining backward compatibility with existing data entities.

Real-time Metadata Ingestion and Processing

The experimental nature of AI workflows demands real-time metadata ingestion and processing. This aligns well with the third-generation architecture I described in my previous post, where a log-oriented approach enables real-time updates and subscriptions to metadata changes.

Real-time processing allows for immediate visibility into ongoing experiments, model training runs, and production model performance. It enables proactive alerting and facilitates rapid iteration in AI development cycles.

Rich Relationship Modeling

AI workflows introduce complex relationships between entities. For example, an experiment might be linked to multiple datasets, feature sets, and model versions. The metadata platform should be able to capture and query these rich relationships efficiently.

Graph-based data models are particularly well-suited for representing these complex relationships. They allow for efficient traversal and querying of the metadata graph, enabling use cases like impact analysis, lineage tracking, and dependency management.

Scalable Storage and Indexing

With the volume of metadata generated by AI workflows, especially from areas like experiment tracking and model monitoring, the metadata platform needs to scale horizontally in its storage and indexing capabilities.

This might involve adopting distributed storage systems, implementing efficient indexing strategies, and leveraging caching mechanisms to ensure fast query performance even as the metadata volume grows.

API-first Architecture

To integrate seamlessly with various AI tools and platforms, the metadata system should provide rich, well-documented APIs for both reading and writing metadata.

These APIs should support both synchronous (request-response) and asynchronous (event-driven) patterns to accommodate different integration scenarios. They should also be versioned to allow for evolution without breaking existing integrations.

Enhanced Search and Discovery

AI practitioners need powerful search capabilities to find relevant experiments, models, and datasets. This requires not just full-text search, but also the ability to search based on complex criteria like performance metrics or feature importance.

Advanced search capabilities might include:

- Faceted search for filtering on multiple dimensions

- Semantic search for finding related concepts

- Time-based search for tracking changes over time

- Similarity search for finding related experiments or models

Governance and Access Control

With the sensitive nature of some AI models and datasets, the metadata platform needs to provide fine-grained access control and audit logging capabilities.

This includes:

- Role-based access control for different types of metadata

- Attribute-based access policies for fine-grained control

- Comprehensive audit logs of metadata access and changes

- Data classification and handling policies integrated with metadata

Practical Notes on Unifying Data and AI Metadata Management

If you’re a data leader or a data practitioner reading through this blog and wondering how AI and metadata systems will be actually unified, this section is for you. Having lived through a similar journey at LinkedIn, and watching the community adoption of DataHub, I have some good guesses for how this might play out in organizations around the world.

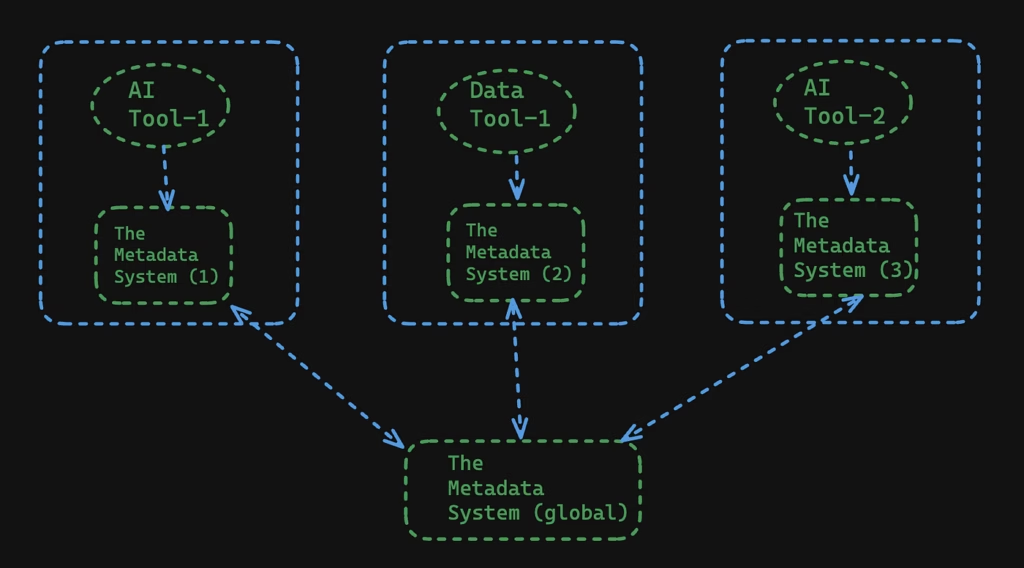

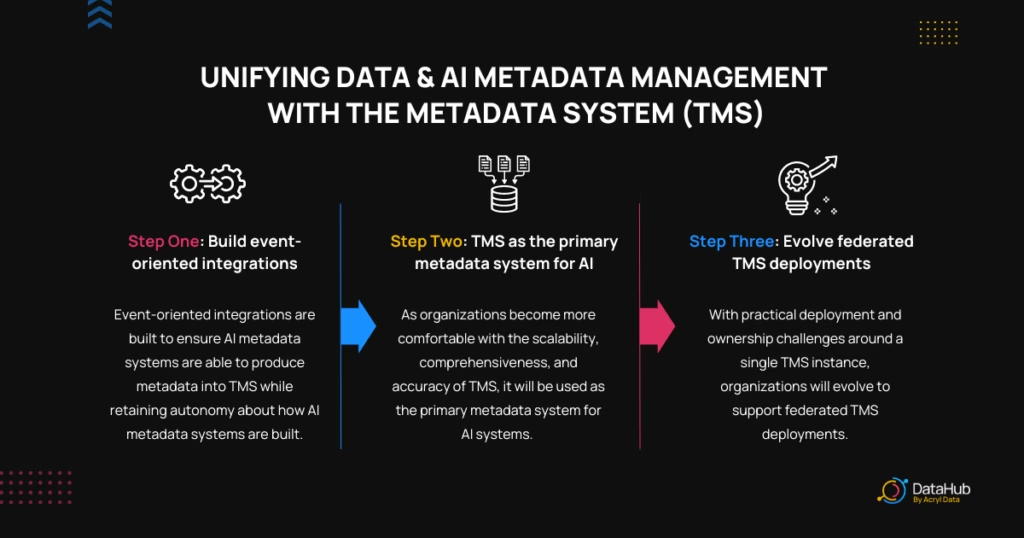

This unification will happen in three steps.

First, we will build event-oriented integrations to ensure that AI metadata systems are able to produce metadata into TMS while still retaining autonomy about how we build an AI metadata system.

Second, as organizations get comfortable with the scalability, comprehensiveness and accuracy of TMS, they will push forward to the second stage — TMS will be used as the primary metadata system for AI systems. This stage will necessarily mean that in-house ML systems or open source ML workflow systems will offer TMS as a supported metadata backend for “primary” metadata storage.

Third, as organizations run into practical deployment and ownership challenges with a single TMS instance, they will evolve to support federated TMS deployments, so that AI metadata systems can be deployed independently from data metadata systems, while sharing the same underlying building blocks, leading to extremely easy inter-connectivity.

The Benefits of Unification

By evolving our metadata platforms to meet these new requirements, we can create a unified system that serves both traditional data and AI workflows effectively. This unified approach offers several benefits:

Holistic Data Governance

By managing metadata for both data and AI in a single platform, organizations can implement consistent governance policies across their entire data ecosystem. This ensures that AI models adhere to the same data quality, privacy, and compliance standards as other data assets.

For example, data lineage can be traced from source systems, through ETL processes, feature engineering steps, model training, and finally to model deployment and monitoring. This end-to-end visibility is crucial for regulatory compliance and data governance initiatives.

Improved Collaboration

A unified platform enables better collaboration between data engineers, data scientists, and ML engineers by providing a common language and reference point for data and AI assets.

Data scientists can easily discover relevant datasets and features for their experiments. ML engineers can understand the provenance of models they’re deploying. Data engineers can see how their pipelines impact downstream AI workflows. This shared context fosters better communication and more efficient workflows.

Enhanced Traceability

With all metadata in one place, it becomes easier to trace the lineage of AI models back to the source data, through feature engineering steps, to the final deployed model.

This traceability is invaluable for debugging issues, understanding the impact of data changes, and ensuring the reproducibility of AI experiments. It also facilitates compliance with regulations that require explainability of AI decisions.

Operational Efficiency

A single platform reduces the overhead of maintaining multiple systems and simplifies the overall data architecture. It eliminates the need for complex integrations between separate data catalogs and ML metadata stores, reducing operational complexity and potential points of failure.

Since 2021, Acryl Data has been leading the charge on driving the DataHub project, collaborating with a vibrant community that includes companies like LinkedIn, Netflix, Pinterest, Optum, and many others. Through this collaborative effort, we’ve been evolving DataHub to meet these new requirements, and we’re seeing significant benefits in how data scientists and ML engineers across various organizations collaborate and manage their AI workflows. The event-sourced architecture and flexible metadata model of DataHub have proven to be robust foundations for incorporating AI-specific metadata, highlighting the capabilities of fourth-generation metadata platforms.

Challenges and Future Directions

While the benefits of a unified metadata platform for data and AI are clear, there are still challenges to overcome.

Performance at Scale

As the volume and complexity of AI metadata grow, maintaining query performance and real-time capabilities becomes increasingly challenging. Future work will need to focus on advanced indexing techniques, efficient graph traversal algorithms, and intelligent caching strategies.

Metadata Quality and Consistency

With metadata coming from various sources and tools in the AI stack, ensuring consistency and quality becomes crucial. Developing robust validation and reconciliation mechanisms for metadata will be an important area of focus.

Privacy and Security

As metadata becomes more comprehensive, it may inadvertently expose sensitive information. Developing privacy-preserving metadata management techniques, such as differential privacy for aggregate metadata, will be essential.

Interoperability

While a unified platform is the goal, the reality is that many organizations will continue to use specialized tools. Improving interoperability and developing standards for metadata exchange between systems will be crucial for creating a cohesive ecosystem.

AI-Assisted Metadata Management

As the volume and complexity of metadata grow, leveraging AI techniques for metadata management itself becomes attractive. This could include automated metadata extraction, intelligent linking of related entities, and AI-powered search and discovery.

Organizational Politics and Conway’s Law

People can be tricky. Some might resist change, guard their data turf, or struggle to work together. Getting everyone on board and collaborating will take effort and good leadership. This probably deserves a blog post on its own, but if you can create win-win situations (for example, “We can all meet compliance faster with a unified metadata store” or “Better interoperability means we can satisfy more stakeholders together”) or use organization-wide mandates (e.g., leveraging company initiatives everyone cares about: compliance requirements, productivity goals, or cost-saving drives), you can break through these log jams and create powerful motivators for adopting a unified system.

Conclusion

As AI continues to permeate every aspect of our data ecosystems, our metadata platforms need to evolve to meet new challenges. The emergence of fourth-generation metadata platforms, exemplified by the recent evolution of DataHub, represents a significant step forward. These platforms build on the principles of third-generation architectures while incorporating new capabilities specifically designed to support AI workflows.

The journey of evolving our metadata platforms for AI is ongoing, and I’m excited to see how the community continues to innovate in this space. Since 2021, Acryl Data, in collaboration with the open-source community, has been at the forefront of evolving DataHub to meet these emerging needs. This collaborative effort, involving companies like LinkedIn, Netflix, Pinterest, Optum, and many others, is driving the development of truly fourth-generation metadata platforms.

As always, I encourage you to share your experiences and thoughts on this topic. How are you managing AI metadata in your organization? What challenges have you encountered, and what solutions have you found effective? Let’s continue this conversation and work together to build the next generation of metadata platforms that can truly unlock the potential of our data and AI ecosystems.

The future of metadata management is not just about cataloging data assets; it’s about creating a comprehensive real-time knowledge graph that connects data, models, experiments, and business outcomes. By embracing fourth-generation metadata platforms, we can enable a new era of data-driven and AI-powered innovation.

References:

[1] Gartner, J. (2005). The road to convergence. IEEE Spectrum, 42(10), 60–65.

[2] Zaballos, A. G., Vallejo, J. C., & Selga, J. M. (2011). Heterogeneous communication architecture for the smart grid. IEEE Network, 25(5), 30–37.

[3] Manjunath, R. L., & Jagadeesh, K. S. (2016). Core banking solutions: The cutting edge technology in banking sector. International Journal of Advanced Research in Computer Science, 7(6).

[4] Kauffman, R. J., & Weber, B. W. (2002). What drives global ICT adoption? Analysis and research directions. Electronic Commerce Research and Applications, 1(3–4), 139–164.

[5] Bharadwaj, A., El Sawy, O. A., Pavlou, P. A., & Venkatraman, N. (2013). Digital business strategy: toward a next generation of insights. MIS Quarterly, 37(2), 471–482.

[6] Polyzotis, N., Roy, S., Whang, S. E., & Zinkevich, M. (2017). Data management challenges in production machine learning. In Proceedings of the 2017 ACM International Conference on Management of Data (pp. 1723–1726).

[7] Miao, H., Li, A., Davis, L. S., & Deshpande, A. (2017). Towards unified data and lifecycle management for deep learning. In 2017 IEEE 33rd International Conference on Data Engineering (ICDE) (pp. 571–582). IEEE.

[8] Hellerstein, J. M., et al. (2017). Ground: A Data Context Service. In CIDR.

Recommended Next Reads